1. About the Documentation

This section provides a brief overview of the Reactor reference documentation. You do not need to read this guide in a linear fashion. Each piece stands on its own, though pieces often refer to one another.

1.1. Latest Version & Copyright Notice

The Reactor reference guide is available as HTML documents. The latest copy is available at https://projectreactor.io/docs/core/release/reference/index.html

Copies of this document may be made for your own use and for distribution to others, provided that you do not charge any fee for such copies and further provided that each copy contains this Copyright Notice, whether distributed in print or electronically.

1.2. Contributing to the Documentation

The reference guide is written in Asciidoc, and you can find its sources at https://github.com/reactor/reactor-core/tree/master/docs/asciidoc.

If you have an improvement or a suggestion, we will be happy to get a pull request from you!

We recommend that you check out a local copy of the repository so that you can generate the documentation by running the asciidoctor Gradle task and checking the rendering. Some of the sections rely on included files, so GitHub rendering is not always complete.

| To facilitate documentation edits, most sections have a link at the end that opens an edit UI directly on GitHub for the main source file for that section. These links are only present in the HTML5 version of this reference guide. They look like the following: Suggest Edit to About the Documentation. |

1.3. Getting Help

You can reach out for help in several ways with Reactor:

- Get in touch with the community on Gitter.
- Ask a question on stackoverflow.com under the project-reactor tag.
- Report bugs in GitHub issues. We closely monitor the following repositories: reactor-core (which covers the essential features) and reactor-addons (which covers reactor-test and adapters issues).

| All of Reactor is open source, including this documentation. If you find problems with the docs or if you want to improve them, please get involved. |

1.4. Where to Go from Here

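- If you would rather dive straight into code, jump to Getting Started.
- If you are new to reactive programming, you should probably start with the Introduction to Reactive Programming.
- If you are familiar with Reactor concepts and are just looking for the right tool for the job but cannot think of a relevant operator, try the Which operator do I need? appendix.
- For a deeper look at the core features of Reactor, head to Reactor Core Features to learn:
  - More about Reactor's reactive types in the Flux, an Asynchronous Sequence of 0-N Items and Mono, an Asynchronous 0-1 Result sections.
  - How to switch threading contexts by using a scheduler.
  - How to handle errors in the Handling Errors section.
- Unit testing? Yes, it is possible with the reactor-test project! See Testing.
- Programmatically creating a sequence offers more advanced ways of creating reactive sources.
- Other advanced topics are covered in Advanced Features and Concepts.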

2. Getting Started

This section contains information that should help you get started with Reactor.

2.1. Introducing Reactor

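Reactor is a fully non-blocking reactive programming foundation for the JVM, with efficient demand management (in the form of managing "backpressure"). It integrates directly with the Java 8 functional APIs, notably CompletableFuture, Stream, and Duration. It offers composable asynchronous sequence APIs, Flux (for [N] elements) and Mono (for [0|1] elements), and extensively implements the Reactive Streams specification.

Reactor also supports non-blocking inter-process communication with the reactor-netty project. Suited for microservices architectures, Reactor Netty offers backpressure-ready network engines for HTTP (including Websockets), TCP, and UDP. Reactive encoding and decoding are fully supported.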

2.2. Prerequisites

Reactor Core runs on Java 8 and above.

It has a transitive dependency on org.reactivestreams:reactive-streams:1.0.3.


2.3. Understanding the BOM

Reactor 3 uses a BOM (Bill of Materials) model (since reactor-core 3.0.4, with the Aluminium release train).

This curated list groups artifacts that are meant to work well together, providing the relevant versions despite potentially divergent versioning schemes in these artifacts.

The BOM is itself versioned, using a release train scheme with a codename followed by a qualifier. The following list shows a few examples:

Aluminium-RELEASE
Californium-BUILD-SNAPSHOT
Aluminium-SR1
Bismuth-RELEASE
Californium-SR32

The codenames represent what would traditionally be the MAJOR.MINOR number. They (mostly) come from the Periodic Table of Elements, in increasing alphabetical order.

The qualifiers are (in chronological order):

- BUILD-SNAPSHOT: Builds for development and testing.
- M1..N: Milestones or developer previews.
- RELEASE: The first GA (General Availability) release in a codename series.
- SR1..N: The subsequent GA releases in a codename series, equivalent to a PATCH number. (SR stands for "Service Release".)
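To make these ordering rules concrete, the following Java sketch sorts release-train versions by codename first (alphabetically) and then by qualifier, in the chronological order just listed. The ReleaseTrainVersions class and its ORDER comparator are hypothetical illustrations. They are not part of Reactor, Maven, or Gradle, and they assume every version has the Codename-QUALIFIER shape shown in the examples.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;

// Hypothetical helper (not part of Reactor, Maven, or Gradle) that orders release-train
// versions such as "Bismuth-RELEASE": codename first, then qualifier.
// Assumes the "Codename-QUALIFIER" shape used in the examples.
final class ReleaseTrainVersions {

    private ReleaseTrainVersions() {
    }

    // Qualifier families in the chronological order: BUILD-SNAPSHOT, Mn, RELEASE, SRn.
    private static int qualifierRank(String qualifier) {
        if (qualifier.equals("BUILD-SNAPSHOT")) return 0;
        if (qualifier.matches("M\\d+")) return 1;
        if (qualifier.equals("RELEASE")) return 2;
        if (qualifier.matches("SR\\d+")) return 3;
        throw new IllegalArgumentException("Unknown qualifier: " + qualifier);
    }

    // Numeric suffix of Mn or SRn (0 otherwise), so that SR10 sorts after SR9.
    private static int qualifierNumber(String qualifier) {
        String digits = qualifier.replaceAll("\\D", "");
        return digits.isEmpty() ? 0 : Integer.parseInt(digits);
    }

    // Split at the FIRST dash only, so "Californium-BUILD-SNAPSHOT" keeps its qualifier intact.
    private static String codename(String version) {
        return version.substring(0, version.indexOf('-'));
    }

    private static String qualifier(String version) {
        return version.substring(version.indexOf('-') + 1);
    }

    static final Comparator<String> ORDER =
            Comparator.comparing((String v) -> codename(v))
                    .thenComparingInt(v -> qualifierRank(qualifier(v)))
                    .thenComparingInt(v -> qualifierNumber(qualifier(v)));

    static List<String> sort(List<String> versions) {
        List<String> sorted = new ArrayList<>(versions);
        sorted.sort(ORDER);
        return sorted;
    }

    public static void main(String[] args) {
        List<String> examples = List.of("Aluminium-RELEASE", "Californium-BUILD-SNAPSHOT",
                "Aluminium-SR1", "Bismuth-RELEASE", "Californium-SR32");
        // Prints [Aluminium-RELEASE, Aluminium-SR1, Bismuth-RELEASE, Californium-BUILD-SNAPSHOT, Californium-SR32]
        System.out.println(sort(examples));
    }
}
```

The numeric comparison of the SR and M suffixes is the one detail that plain string sorting would get wrong ("SR10" < "SR9" lexicographically).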

2.4. Getting Reactor

As mentioned earlier, the easiest way to use Reactor in your code is to use the BOM and add the relevant dependencies to your project. Note that, when you add such a dependency, you must omit the version so that the version gets picked up from the BOM.

However, if you want to force the use of a specific artifact’s version, you can specify it when adding your dependency, as you usually would. You can also forgo the BOM entirely and specify dependencies by their artifact versions.

2.4.1. Maven Installation

Maven natively supports the BOM concept. First, you need to import the BOM by adding the following snippet to your pom.xml:

<dependencyManagement> (1)
    <dependencies>
        <dependency>
            <groupId>io.projectreactor</groupId>
            <artifactId>reactor-bom</artifactId>
            <version>Bismuth-RELEASE</version>
            <type>pom</type>
            <scope>import</scope>
        </dependency>
    </dependencies>
</dependencyManagement>

| 1 | Notice the dependencyManagement tag. This is in addition to the regular dependencies section. |

If the top section (dependencyManagement) already exists in your pom, add only the contents.

Next, add your dependencies to the relevant reactor projects, as usual, except without a <version>, as follows:

<dependencies>
    <dependency>
        <groupId>io.projectreactor</groupId>
        <artifactId>reactor-core</artifactId> (1)
        (2)
    </dependency>
    <dependency>
        <groupId>io.projectreactor</groupId>
        <artifactId>reactor-test</artifactId> (3)
        <scope>test</scope>
    </dependency>
</dependencies>

| 1 | Dependency on the core library. |
| 2 | No version tag here. |
| 3 | reactor-test provides facilities to unit test reactive streams. |

2.4.2. Gradle Installation

Prior to version 5.0, Gradle had no core support for Maven BOMs, but you can use Spring's gradle-dependency-management plugin.

First, apply the plugin from the Gradle Plugin Portal, as follows:

plugins {
    id "io.spring.dependency-management" version "1.0.7.RELEASE" (1)
}

| 1 | As of this writing, 1.0.7.RELEASE is the latest version of the plugin. Check for updates. |

Then use it to import the BOM, as follows:

dependencyManagement {
    imports {
        mavenBom "io.projectreactor:reactor-bom:Bismuth-RELEASE"
    }
}

Finally, add a dependency to your project, without a version number, as follows:

dependencies {
    implementation 'io.projectreactor:reactor-core' (1)
}

| 1 | There is no third :-separated section for the version. It is taken from the BOM. |

Since Gradle 5.0, you can use the native Gradle support for BOMs:

dependencies {
    implementation platform('io.projectreactor:reactor-bom:Bismuth-RELEASE')
    implementation 'io.projectreactor:reactor-core' (1)
}

| 1 | There is no third :-separated section for the version. It is taken from the BOM. |

2.4.3. Milestones and Snapshots

Milestones and developer previews are distributed through the Spring Milestones repository rather than Maven Central. To add it to your build configuration file, use the following snippet:

<repositories>
    <repository>
        <id>spring-milestones</id>
        <name>Spring Milestones Repository</name>
        <url>https://repo.spring.io/milestone</url>
    </repository>
</repositories>

For Gradle, use the following snippet:

repositories {
    maven { url 'https://repo.spring.io/milestone' }
    mavenCentral()
}

Similarly, snapshots are also available in a separate dedicated repository, as the following example shows:

<repositories>
    <repository>
        <id>spring-snapshots</id>
        <name>Spring Snapshot Repository</name>
        <url>https://repo.spring.io/snapshot</url>
    </repository>
</repositories>

For Gradle, use the following snippet:

repositories {
    maven { url 'https://repo.spring.io/snapshot' }
    mavenCentral()
}

3. Introduction to Reactive Programming

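Reactor is an implementation of the Reactive Programming paradigm, which can be summed up as follows:

Reactive programming is an asynchronous programming paradigm concerned with data streams and the propagation of change. This means that it becomes possible to express static (e.g. arrays) or dynamic (e.g. event emitters) data streams with ease via the employed programming language.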

As a first step in the direction of reactive programming, Microsoft created the Reactive Extensions (Rx) library in the .NET ecosystem. Then RxJava implemented reactive programming on the JVM. As time went on, a standardization for Java emerged through the Reactive Streams effort, a specification that defines a set of interfaces and interaction rules for reactive libraries on the JVM. Its interfaces have been integrated into Java 9 under the Flow class.

The reactive programming paradigm is often presented in object-oriented languages as an extension of the Observer design pattern. You can also compare the main reactive streams pattern with the familiar Iterator design pattern, as there is a duality to the Iterable-Iterator pair in all of these libraries. One major difference is that, while an Iterator is pull-based, reactive streams are push-based.

Using an iterator is an imperative programming pattern, even though the method of accessing values is solely the responsibility of the Iterable. Indeed, it is up to the developer to choose when to access the next() item in the sequence. In reactive streams, the equivalent of the above pair is Publisher-Subscriber. But it is the Publisher that notifies the Subscriber of newly available values as they come, and this push aspect is the key to being reactive. Also, operations applied to pushed values are expressed declaratively rather than imperatively: The programmer expresses the logic of the computation rather than describing its exact control flow.

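To make the pull/push contrast concrete, the following sketch uses only the JDK. With an Iterator, the consumer pulls each value by calling next(). With the java.util.concurrent.Flow interfaces (the Reactive Streams interfaces as integrated in Java 9, so this needs Java 9 or later, whereas Reactor Core itself targets Java 8 and uses the org.reactivestreams interfaces), the Publisher pushes each value into Subscriber.onNext. ListPublisher is a hypothetical, deliberately simplified synchronous publisher written for this illustration. It is neither Reactor code nor a fully specification-compliant implementation.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.concurrent.Flow;

final class PullVersusPush {

    // Hypothetical, simplified synchronous publisher over a fixed list (illustration only).
    static final class ListPublisher<T> implements Flow.Publisher<T> {
        private final List<T> items;

        ListPublisher(List<T> items) {
            this.items = List.copyOf(items);
        }

        @Override
        public void subscribe(Flow.Subscriber<? super T> subscriber) {
            subscriber.onSubscribe(new Flow.Subscription() {
                private int index;
                private boolean done;

                @Override
                public void request(long n) {
                    // Push up to n items, then signal completion once the list is exhausted.
                    for (long i = 0; i < n && index < items.size() && !done; i++) {
                        subscriber.onNext(items.get(index++));
                    }
                    if (index == items.size() && !done) {
                        done = true;
                        subscriber.onComplete();
                    }
                }

                @Override
                public void cancel() {
                    done = true;
                }
            });
        }
    }

    // Pull: the consumer decides when to fetch the next value.
    static List<String> pull(List<String> source) {
        List<String> received = new ArrayList<>();
        Iterator<String> it = source.iterator();
        while (it.hasNext()) {
            received.add(it.next());
        }
        return received;
    }

    // Push: the publisher calls onNext on the subscriber; the subscriber only reacts.
    static List<String> push(List<String> source) {
        List<String> received = new ArrayList<>();
        new ListPublisher<>(source).subscribe(new Flow.Subscriber<String>() {
            @Override public void onSubscribe(Flow.Subscription s) { s.request(Long.MAX_VALUE); }
            @Override public void onNext(String item) { received.add(item); }
            @Override public void onError(Throwable t) { received.add("error: " + t.getMessage()); }
            @Override public void onComplete() { received.add("complete"); }
        });
        return received;
    }

    public static void main(String[] args) {
        List<String> source = List.of("foo", "bar", "foobar");
        System.out.println(pull(source)); // [foo, bar, foobar]
        System.out.println(push(source)); // [foo, bar, foobar, complete]
    }
}
```

Note that the push side also carries the completion signal, which an Iterator can only express indirectly through hasNext().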

In addition to pushing values, the error-handling and completion aspects are also covered in a well defined manner. A Publisher can push new values to its Subscriber (by calling onNext) but can also signal an error (by calling onError) or completion (by calling onComplete). Both errors and completion terminate the sequence. This can be summed up as follows:

onNext x 0..N [onError | onComplete]

This approach is very flexible. The pattern supports use cases where there is no value, one value, or n values (including an infinite sequence of values, such as the continuing ticks of a clock).

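The grammar above can be read as a small state machine: any number of onNext signals, followed by at most one terminal signal, after which nothing else may arrive. The SignalGrammar class below is a hypothetical checker (not part of Reactor or the Reactive Streams API) that validates a recorded list of signal names against that rule.

```java
import java.util.List;

// Hypothetical checker (not a Reactor API) for the signal grammar
//   onNext x 0..N [onError | onComplete]
// applied to a recorded list of signal names.
final class SignalGrammar {

    static boolean isValid(List<String> signals) {
        boolean terminated = false;
        for (String signal : signals) {
            if (terminated) {
                return false; // nothing may follow onError or onComplete
            }
            switch (signal) {
                case "onNext":
                    break; // any number of values, including none
                case "onError":
                case "onComplete":
                    terminated = true; // at most one terminal signal
                    break;
                default:
                    throw new IllegalArgumentException("Unknown signal: " + signal);
            }
        }
        return true; // no terminal signal yet is also valid (e.g. an infinite sequence)
    }

    public static void main(String[] args) {
        System.out.println(isValid(List.of("onNext", "onNext", "onComplete"))); // true
        System.out.println(isValid(List.of("onComplete")));                      // true: empty sequence
        System.out.println(isValid(List.of("onError", "onNext")));               // false
    }
}
```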

But why do we need such an asynchronous reactive library in the first place?

3.1. Blocking Can Be Wasteful

Modern applications can reach huge numbers of concurrent users, and, even though the capabilities of modern hardware have continued to improve, performance of modern software is still a key concern.

There are, broadly, two ways one can improve a program’s performance:

- Parallelize to use more threads and more hardware resources.
- Seek more efficiency in how current resources are used.

Usually, Java developers write programs by using blocking code. This practice is fine until there is a performance bottleneck. Then it is time to introduce additional threads, running similar blocking code. But this scaling in resource utilization can quickly introduce contention and concurrency problems.

Worse still, blocking wastes resources. If you look closely, as soon as a program involves some latency (notably I/O, such as a database request or a network call), resources are wasted because threads (possibly many threads) now sit idle, waiting for data.

So the parallelization approach is not a silver bullet. It is necessary to access the full power of the hardware, but it is also complex to reason about and susceptible to resource wasting.

3.2. Asynchronicity to the Rescue?

The second approach mentioned earlier, seeking more efficiency, can be a solution to the resource wasting problem. By writing asynchronous, non-blocking code, you let the execution switch to another active task that uses the same underlying resources and later comes back to the current process when the asynchronous processing has finished.

But how can you produce asynchronous code on the JVM? Java offers two models of asynchronous programming:

- Callbacks: Asynchronous methods do not have a return value but take an extra callback parameter (a lambda or anonymous class) that gets called when the result is available. A well known example is Swing's EventListener hierarchy.
- Futures: Asynchronous methods immediately return a Future<T>. The asynchronous process computes a T value, but the Future object wraps access to it. The value is not immediately available, and the object can be polled until the value is available. For instance, an ExecutorService running Callable<T> tasks uses Future objects.

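Both models can be tried with the JDK alone. In the sketch below, lengthWithCallback and lengthWithFuture are hypothetical methods. The first hands its result to a Consumer callback when it is ready. The second returns a Future<Integer> immediately, and the caller later blocks in get(), which is wrapped here in a small await helper with an assumed five-second timeout.

```java
import java.util.concurrent.Callable;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.TimeoutException;
import java.util.function.Consumer;

// Hypothetical example methods, JDK only, showing the two classic asynchronous models.
final class AsyncModels {

    // Callback model: no return value; the result is handed to `callback` once it is ready
    // (computed here on the common ForkJoinPool via CompletableFuture.runAsync).
    static void lengthWithCallback(String input, Consumer<Integer> callback) {
        CompletableFuture.runAsync(() -> callback.accept(input.length()));
    }

    // Future model: returns immediately; the Future wraps access to the eventual value.
    static Future<Integer> lengthWithFuture(ExecutorService executor, String input) {
        Callable<Integer> task = input::length;
        return executor.submit(task);
    }

    // Blocks the calling thread until the value is available (assumed 5-second timeout).
    static <T> T await(Future<T> future) {
        try {
            return future.get(5, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        } catch (ExecutionException | TimeoutException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        // A CompletableFuture is used here only so the demo can wait for the callback.
        CompletableFuture<Integer> fromCallback = new CompletableFuture<>();
        lengthWithCallback("reactor", fromCallback::complete);
        System.out.println("callback received " + fromCallback.join()); // callback received 7

        ExecutorService executor = Executors.newSingleThreadExecutor();
        try {
            System.out.println("future yielded " + await(lengthWithFuture(executor, "flux"))); // future yielded 4
        } finally {
            executor.shutdown();
        }
    }
}
```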

Are these techniques good enough? Not for every use case, and both approaches have limitations.

Callbacks are hard to compose together, quickly leading to code that is difficult to read and maintain (known as “Callback Hell”).

Consider an example: showing the top five favorites from a user on the UI or suggestions if she does not have a favorite. This goes through three services (one gives favorite IDs, the second fetches favorite details, and the third offers suggestions with details), as follows:

userService.getFavorites(userId, new Callback<List<String>>() { (1)
    public void onSuccess(List<String> list) { (2)
        if (list.isEmpty()) { (3)
            suggestionService.getSuggestions(new Callback<List<Favorite>>() {
                public void onSuccess(List<Favorite> list) { (4)
                    UiUtils.submitOnUiThread(() -> { (5)
                        list.stream()
                            .limit(5)
                            .forEach(uiList::show); (6)
                    });
                }

                public void onError(Throwable error) { (7)
                    UiUtils.errorPopup(error);
                }
            });
        } else {
            list.stream() (8)
                .limit(5)
                .forEach(favId -> favoriteService.getDetails(favId, (9)
                    new Callback<Favorite>() {
                        public void onSuccess(Favorite details) {
                            UiUtils.submitOnUiThread(() -> uiList.show(details));
                        }

                        public void onError(Throwable error) {
                            UiUtils.errorPopup(error);
                        }
                    }
                ));
        }
    }

    public void onError(Throwable error) {
        UiUtils.errorPopup(error);
    }
});

| 1 | We have callback-based services: a Callback interface with a method invoked when the asynchronous process was successful and one invoked when an error occurs. |
| 2 | The first service invokes its callback with the list of favorite IDs. |
| 3 | If the list is empty, we must go to the suggestionService. |
| 4 | The suggestionService gives a List<Favorite> to a second callback. |
| 5 | Since we deal with a UI, we need to ensure our consuming code runs in the UI thread. |
| 6 | We use a Java 8 Stream to limit the number of suggestions processed to five, and we show them in a graphical list in the UI. |
| 7 | At each level, we deal with errors the same way: we show them in a popup. |
| 8 | Back to the favorite ID level. If the service returned a full list, we need to go to the favoriteService to get detailed Favorite objects. Since we want only five, we first stream the list of IDs to limit it to five. |
| 9 | Once again, a callback. This time we get a fully-fledged Favorite object that we push to the UI inside the UI thread. |

That is a lot of code, and it is a bit hard to follow and has repetitive parts. Consider its equivalent in Reactor:

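userService.getFavorites(userId) (1)
           .flatMap(favoriteService::getDetails) (2)
           .switchIfEmpty(suggestionService.getSuggestions()) (3)
           .take(5) (4)
           .publishOn(UiUtils.uiThreadScheduler()) (5)
           .subscribe(uiList::show, UiUtils::errorPopup); (6)

| 1 | We start with a flow of favorite IDs. |
| 2 | We asynchronously transform these into detailed Favorite objects (flatMap). We now have a flow of Favorite. |
| 3 | If the flow of Favorite is empty, we switch to a fallback through the suggestionService. |
| 4 | We are interested in, at most, five elements from the resulting flow. |
| 5 | At the end, we want to process each piece of data in the UI thread. |
| 6 | We trigger the flow by describing what to do with the final form of the data (show it in a UI list) and what to do in case of an error (show a popup). |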

What if you want to ensure the favorite IDs are retrieved in less than 800ms or, if it takes longer, get them from a cache? In the callback-based code, that is a complicated task. In Reactor, it becomes as easy as adding a timeout operator in the chain, as follows:

userService.getFavorites(userId)
           .timeout(Duration.ofMillis(800)) (1)
           .onErrorResume(e -> cacheService.cachedFavoritesFor(userId)) (2)
           .flatMap(favoriteService::getDetails) (3)
           .switchIfEmpty(suggestionService.getSuggestions())
           .take(5)
           .publishOn(UiUtils.uiThreadScheduler())
           .subscribe(uiList::show, UiUtils::errorPopup);

| 1 | If the part above does not emit anything within 800ms, propagate an error. |
| 2 | In case of an error, fall back to the cacheService. onErrorResume takes a function of the error, hence the e -> lambda. |
| 3 | The rest of the chain is similar to the previous example. |

Future objects are a bit better than callbacks, but they still do not do well at composition, despite the improvements brought in Java 8 by CompletableFuture. Orchestrating multiple Future objects together is doable but not easy. Also, Future has other problems:

- It is easy to end up with another blocking situation with Future objects by calling the get() method.
- They do not support lazy computation.
- They lack support for multiple values and advanced error handling.

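The claim that futures do not support lazy computation is easy to observe. A CompletableFuture starts its work as soon as it is created, whether or not anyone ever reads the result. A plain Supplier does nothing until it is asked; it is used below as a stand-in for a lazy description, in the spirit of a Reactor chain that has not been subscribed to. The direct executor Runnable::run is an assumption made only so that the eager start happens synchronously and the counts are deterministic. With the default pool, the work still starts right away, just on another thread.

```java
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.function.Supplier;

final class EagerFuture {

    // Stand-in for an expensive call; counts how many times it actually ran.
    static int expensiveCall(AtomicInteger calls) {
        calls.incrementAndGet();
        return 42;
    }

    static int callsAfterCreatingFuture() {
        AtomicInteger calls = new AtomicInteger();
        // Runnable::run executes the task immediately on the calling thread.
        CompletableFuture<Integer> future =
                CompletableFuture.supplyAsync(() -> expensiveCall(calls), Runnable::run);
        // Nobody has called get() or join() on `future`, yet the work already ran.
        return calls.get();
    }

    static int callsAfterCreatingSupplier() {
        AtomicInteger calls = new AtomicInteger();
        Supplier<Integer> lazy = () -> expensiveCall(calls);
        // Nothing runs until someone calls lazy.get().
        return calls.get();
    }

    public static void main(String[] args) {
        System.out.println("CompletableFuture: work ran " + callsAfterCreatingFuture() + " time(s)"); // 1 time(s)
        System.out.println("Supplier: work ran " + callsAfterCreatingSupplier() + " time(s)");       // 0 time(s)
    }
}
```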

Consider another example: We get a list of IDs from which we want to fetch a name and a statistic and combine these pair-wise, all of it asynchronously. The following example does so with a list of type CompletableFuture:

CompletableFuture combination

CompletableFuture<List<String>> ids = ifhIds(); (1)

CompletableFuture<List<String>> result = ids.thenComposeAsync(l -> { (2)
    Stream<CompletableFuture<String>> zip =
        l.stream().map(i -> { (3)
            CompletableFuture<String> nameTask = ifhName(i); (4)
            CompletableFuture<Integer> statTask = ifhStat(i); (5)

            return nameTask.thenCombineAsync(statTask, (name, stat) -> "Name " + name + " has stats " + stat); (6)
        });
    List<CompletableFuture<String>> combinationList = zip.collect(Collectors.toList()); (7)
    CompletableFuture<String>[] combinationArray = combinationList.toArray(new CompletableFuture[combinationList.size()]);

    CompletableFuture<Void> allDone = CompletableFuture.allOf(combinationArray); (8)
    return allDone.thenApply(v -> combinationList.stream()
        .map(CompletableFuture::join) (9)
        .collect(Collectors.toList()));
});

List<String> results = result.join(); (10)
assertThat(results).contains(
    "Name NameJoe has stats 103",
    "Name NameBart has stats 104",
    "Name NameHenry has stats 105",
    "Name NameNicole has stats 106",
    "Name NameABSLAJNFOAJNFOANFANSF has stats 121");

| 1 | We start off with a future that gives us a list of id values to process. |
| 2 | We want to start some deeper asynchronous processing once we get the list. |
| 3 | For each element in the list: |
| 4 | Asynchronously get the associated name. |
| 5 | Asynchronously get the associated statistic. |
| 6 | Combine both results. |
| 7 | We now have a list of futures that represent all the combination tasks. To execute these tasks, we need to convert the list to an array. |
| 8 | Pass the array to CompletableFuture.allOf, which outputs a future that completes when all tasks have completed. |
| 9 | The tricky bit is that allOf returns CompletableFuture<Void>, so we reiterate over the list of futures, collecting their results by using join() (which, here, does not block, since allOf ensures the futures are all done). |
| 10 | Once the whole asynchronous pipeline has been triggered, we wait for it to be processed and return the list of results that we can assert. |
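The listing above depends on ifhIds, ifhName, and ifhStat, which are not shown. The following self-contained variant replaces them with hypothetical in-memory stubs, so the same allOf and join choreography can be run with the JDK alone. The stat is simply 100 plus the length of the id, an arbitrary choice that happens to reproduce the expected strings. The variant uses thenCompose and thenCombine rather than their Async variants, which changes only the threads involved, not the result.

```java
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.stream.Collectors;

final class CompletableFutureCombination {

    // Hypothetical in-memory stand-ins for the ifhIds/ifhName/ifhStat services.
    static CompletableFuture<List<String>> ifhIds() {
        return CompletableFuture.supplyAsync(
                () -> List.of("Joe", "Bart", "Henry", "Nicole", "ABSLAJNFOAJNFOANFANSF"));
    }

    static CompletableFuture<String> ifhName(String id) {
        return CompletableFuture.supplyAsync(() -> "Name" + id);
    }

    static CompletableFuture<Integer> ifhStat(String id) {
        // Arbitrary stub: 100 plus the length of the id.
        return CompletableFuture.supplyAsync(() -> 100 + id.length());
    }

    static List<String> combine() {
        CompletableFuture<List<String>> result = ifhIds().thenCompose(ids -> {
            // One combined future per id: name and stat fetched concurrently, then merged.
            List<CompletableFuture<String>> combinations = ids.stream()
                    .map(id -> ifhName(id).thenCombine(ifhStat(id),
                            (name, stat) -> "Name " + name + " has stats " + stat))
                    .collect(Collectors.toList());
            // allOf only signals completion; the values must be collected separately.
            return CompletableFuture.allOf(combinations.toArray(new CompletableFuture[0]))
                    .thenApply(v -> combinations.stream()
                            .map(CompletableFuture::join) // does not block: all are done
                            .collect(Collectors.toList()));
        });
        return result.join(); // blocks until the whole pipeline has finished
    }

    public static void main(String[] args) {
        combine().forEach(System.out::println);
    }
}
```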

Since Reactor has more combination operators out of the box, this process can be simplified, as follows:

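Flux<String> ids = ifhrIds(); (1)

Flux<String> combinations =
    ids.flatMap(id -> { (2)
        Mono<String> nameTask = ifhrName(id); (3)
        Mono<Integer> statTask = ifhrStat(id); (4)

        return nameTask.zipWith(statTask, (5)
            (name, stat) -> "Name " + name + " has stats " + stat);
    });

Mono<List<String>> result = combinations.collectList(); (6)

List<String> results = result.block(); (7)
assertThat(results).containsExactly( (8)
    "Name NameJoe has stats 103",
    "Name NameBart has stats 104",
    "Name NameHenry has stats 105",
    "Name NameNicole has stats 106",
    "Name NameABSLAJNFOAJNFOANFANSF has stats 121"
);

| 1 | This time, we start from an asynchronously provided sequence of ids (a Flux<String>). |
| 2 | For each element in the sequence, we asynchronously process it twice (inside the function that is the body of the flatMap call). |
| 3 | Get the associated name. |
| 4 | Get the associated statistic. |
| 5 | Asynchronously combine the two values. |
| 6 | Aggregate the values into a List as they become available. |
| 7 | In production, we would continue working with the Flux asynchronously by further combining it or subscribing to it. Most probably, we would return the result Mono. Since we are in a test, we instead block, waiting for the processing to finish, and then directly return the aggregated list of values. |
| 8 | Assert the result. |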

The perils of using callbacks and Future objects are similar and are what reactive programming addresses with the Publisher-Subscriber pair.

3.3. From Imperative to Reactive Programming

Reactive libraries, such as Reactor, aim to address these drawbacks of “classic” asynchronous approaches on the JVM while also focusing on a few additional aspects:

- Composability and readability
- Data as a flow manipulated with a rich vocabulary of operators
- Nothing happens until you subscribe
- Backpressure or the ability for the consumer to signal the producer that the rate of emission is too high
- High level but high value abstraction that is concurrency-agnostic

3.3.1. Composability and Readability

By “composability”, we mean the ability to orchestrate multiple asynchronous tasks, in which we use results from previous tasks to feed input to subsequent ones. Alternatively, we can run several tasks in a fork-join style. In addition, we can reuse asynchronous tasks as discrete components in a higher-level system.

The ability to orchestrate tasks is tightly coupled to the readability and maintainability of code. As the layers of asynchronous processes increase in both number and complexity, being able to compose and read code becomes increasingly difficult. As we saw, the callback model is simple, but one of its main drawbacks is that, for complex processes, you need to have a callback executed from a callback, itself nested inside another callback, and so on. That mess is known as “Callback Hell”. As you can guess (or know from experience), such code is pretty hard to go back to and reason about.

Reactor offers rich composition options, wherein code mirrors the organization of the abstract process, and everything is generally kept at the same level (nesting is minimized).

3.3.2. The Assembly Line Analogy

You can think of data processed by a reactive application as moving through an assembly line. Reactor is both the conveyor belt and the workstations. The raw material pours from a source (the original Publisher) and ends up as a finished product ready to be pushed to the consumer (or Subscriber).

The raw material can go through various transformations and other intermediary steps or be part of a larger assembly line that aggregates intermediate pieces together. If there is a glitch or clogging at one point (perhaps boxing the products takes a disproportionately long time), the afflicted workstation can signal upstream to limit the flow of raw material.

3.3.3. Operators

In Reactor, operators are the workstations in our assembly analogy. Each operator adds behavior to a Publisher and wraps the previous step's Publisher into a new instance. The whole chain is thus linked, such that data originates from the first Publisher and moves down the chain, transformed by each link. Eventually, a Subscriber finishes the process. Remember that nothing happens until a Subscriber subscribes to a Publisher, as we see shortly.

| Understanding that operators create new instances can help you avoid a common mistake that would lead you to believe that an operator you used in your chain is not being applied. See this item in the FAQ. |

While the Reactive Streams specification does not specify operators at all, one of the best added values of reactive libraries, such as Reactor, is the rich vocabulary of operators that they provide. These cover a lot of ground, from simple transformation and filtering to complex orchestration and error handling.

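The point that operators create new instances can be shown with a toy push-based source. WrappingSource is hypothetical and much simpler than Reactor's Flux (no backpressure, no error or completion signals). Like a Reactor operator, though, each of its map and filter calls returns a new source that wraps the previous one and leaves the original untouched. The main method also reproduces the common mistake mentioned above: calling an operator and discarding its result has no effect on the original source.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;
import java.util.function.Function;
import java.util.function.Predicate;

// Toy model, not Reactor's API: each operator returns a NEW source wrapping the previous one.
final class WrappingSource<T> {

    // What to do when a consumer subscribes: push values into it.
    private final Consumer<Consumer<? super T>> onSubscribe;

    private WrappingSource(Consumer<Consumer<? super T>> onSubscribe) {
        this.onSubscribe = onSubscribe;
    }

    @SafeVarargs
    static <E> WrappingSource<E> just(E... values) {
        return new WrappingSource<>(downstream -> {
            for (E value : values) {
                downstream.accept(value);
            }
        });
    }

    // Operator: wraps `this`; values are transformed on their way downstream.
    <R> WrappingSource<R> map(Function<? super T, ? extends R> mapper) {
        return new WrappingSource<>(downstream ->
                subscribe(value -> downstream.accept(mapper.apply(value))));
    }

    // Operator: wraps `this`; only matching values are passed downstream.
    WrappingSource<T> filter(Predicate<? super T> predicate) {
        return new WrappingSource<>(downstream -> subscribe(value -> {
            if (predicate.test(value)) {
                downstream.accept(value);
            }
        }));
    }

    void subscribe(Consumer<? super T> consumer) {
        onSubscribe.accept(consumer);
    }

    static <E> List<E> collect(WrappingSource<E> source) {
        List<E> out = new ArrayList<>();
        source.subscribe(out::add);
        return out;
    }

    public static void main(String[] args) {
        WrappingSource<Integer> source = WrappingSource.just(1, 2, 3, 4);
        source.map(i -> i * 10); // common mistake: the new, wrapped source is discarded
        System.out.println(collect(source)); // [1, 2, 3, 4]

        WrappingSource<Integer> chained = source.filter(i -> i % 2 == 0).map(i -> i * 10);
        System.out.println(collect(chained)); // [20, 40]
    }
}
```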

3.3.4. Nothing Happens Until You subscribe()

In Reactor, when you write a Publisher chain, data does not start pumping into it by default. Instead, you create an abstract description of your asynchronous process (which can help with reusability and composition).

By the act of subscribing, you tie the Publisher to a Subscriber, which triggers the flow of data in the whole chain. This is achieved internally by a single request signal from the Subscriber that is propagated upstream, all the way back to the source Publisher.

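The following JDK-only sketch uses the Java 9+ Flow interfaces. It is not Reactor code and not fully specification-compliant. It logs every step of a two-stage chain: a hypothetical source followed by a hypothetical map operator. Assembling the chain logs nothing beyond "chain assembled". Only subscribe() sets things in motion, and the subscriber's request(2) travels up through map to the source before any data flows down.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;
import java.util.function.Function;

// Every step appends to `log`, so the order of signals through the chain is visible.
final class SubscribeTriggersFlow {

    // Hypothetical source: emits "a" then "b", but only in response to request(n).
    static Flow.Publisher<String> source(List<String> log) {
        List<String> items = List.of("a", "b");
        return subscriber -> {
            log.add("source: subscribed");
            subscriber.onSubscribe(new Flow.Subscription() {
                private int index;
                private boolean done;

                @Override
                public void request(long n) {
                    log.add("source: request(" + n + ")");
                    for (long i = 0; i < n && index < items.size() && !done; i++) {
                        subscriber.onNext(items.get(index++));
                    }
                    if (index == items.size() && !done) {
                        done = true;
                        subscriber.onComplete();
                    }
                }

                @Override
                public void cancel() {
                    done = true;
                }
            });
        };
    }

    // Hypothetical operator: wraps upstream; subscription and request(n) travel upstream.
    static <T, R> Flow.Publisher<R> map(Flow.Publisher<T> upstream,
                                        Function<? super T, ? extends R> mapper,
                                        List<String> log) {
        return downstream -> {
            log.add("map: subscribed");
            upstream.subscribe(new Flow.Subscriber<T>() {
                @Override
                public void onSubscribe(Flow.Subscription upstreamSubscription) {
                    downstream.onSubscribe(new Flow.Subscription() {
                        @Override
                        public void request(long n) {
                            log.add("map: request(" + n + ")");
                            upstreamSubscription.request(n); // propagate demand to the source
                        }

                        @Override
                        public void cancel() {
                            upstreamSubscription.cancel();
                        }
                    });
                }

                @Override public void onNext(T item) { downstream.onNext(mapper.apply(item)); }
                @Override public void onError(Throwable error) { downstream.onError(error); }
                @Override public void onComplete() { downstream.onComplete(); }
            });
        };
    }

    static List<String> run(boolean subscribe) {
        List<String> log = new ArrayList<>();
        Flow.Publisher<String> chain = map(source(log), s -> s.toUpperCase(), log);
        log.add("chain assembled"); // nothing has happened yet
        if (subscribe) {
            chain.subscribe(new Flow.Subscriber<String>() {
                @Override public void onSubscribe(Flow.Subscription s) {
                    log.add("subscriber: request(2)");
                    s.request(2);
                }
                @Override public void onNext(String item) { log.add("subscriber: onNext(" + item + ")"); }
                @Override public void onError(Throwable error) { log.add("subscriber: onError"); }
                @Override public void onComplete() { log.add("subscriber: onComplete"); }
            });
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(run(false)); // [chain assembled]
        run(true).forEach(System.out::println);
    }
}
```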

3.3.5. Backpressure

Propagating signals upstream is also used to implement backpressure, which we described in the assembly line analogy as a feedback signal sent up the line when a workstation processes more slowly than an upstream workstation.

The real mechanism defined by the Reactive Streams specification is pretty close to the analogy: A subscriber can work in unbounded mode and let the source push all the data at its fastest achievable rate or it can use the request mechanism to signal the source that it is ready to process at most n elements.

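A subscriber that uses the request mechanism instead of unbounded mode can be sketched with the JDK Flow interfaces (Java 9+). The hypothetical BatchingSubscriber asks for two items at a time and requests the next batch only after processing the current one. The hypothetical ListPublisher never emits more than has been requested. Requesting Long.MAX_VALUE instead would correspond to unbounded mode, since the Reactive Streams specification allows a publisher to treat that amount as effectively unbounded demand.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.Flow;

// JDK-only illustration, not Reactor code. The publisher is a simplified synchronous one:
// it never emits more than requested, but unlike a spec-compliant publisher it recurses
// when request(n) is called from inside onNext.
final class BoundedDemand {

    static final class ListPublisher<T> implements Flow.Publisher<T> {
        private final List<T> items;

        ListPublisher(List<T> items) {
            this.items = List.copyOf(items);
        }

        @Override
        public void subscribe(Flow.Subscriber<? super T> subscriber) {
            subscriber.onSubscribe(new Flow.Subscription() {
                private int index;
                private boolean done;

                @Override
                public void request(long n) {
                    for (long i = 0; i < n && index < items.size() && !done; i++) {
                        subscriber.onNext(items.get(index++));
                    }
                    if (index == items.size() && !done) {
                        done = true;
                        subscriber.onComplete();
                    }
                }

                @Override
                public void cancel() {
                    done = true;
                }
            });
        }
    }

    // Asks for `batch` items, and for the next batch only once the current one is processed.
    static final class BatchingSubscriber<T> implements Flow.Subscriber<T> {
        final List<String> log = new ArrayList<>();
        private final int batch;
        private Flow.Subscription subscription;
        private int remaining;

        BatchingSubscriber(int batch) {
            this.batch = batch;
        }

        private void requestBatch() {
            remaining = batch;
            log.add("request(" + batch + ")");
            subscription.request(batch);
        }

        @Override public void onSubscribe(Flow.Subscription s) { subscription = s; requestBatch(); }

        @Override public void onNext(T item) {
            log.add("onNext(" + item + ")");
            if (--remaining == 0) {
                requestBatch();
            }
        }

        @Override public void onError(Throwable error) { log.add("onError"); }
        @Override public void onComplete() { log.add("onComplete"); }
    }

    static List<String> run(int batch, List<Integer> items) {
        BatchingSubscriber<Integer> subscriber = new BatchingSubscriber<>(batch);
        new ListPublisher<>(items).subscribe(subscriber);
        return subscriber.log;
    }

    public static void main(String[] args) {
        // Prints [request(2), onNext(1), onNext(2), request(2), onNext(3), onNext(4), request(2), onNext(5), onComplete]
        System.out.println(run(2, List.of(1, 2, 3, 4, 5)));
    }
}
```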

Intermediate operators can also change the request in transit. Imagine a buffer operator that groups elements in batches of ten. If the subscriber requests one buffer, it is acceptable for the source to produce ten elements. Some operators also implement prefetching strategies, which avoid request(1) round-trips and are beneficial if producing the elements before they are requested is not too costly.

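The buffer example boils down to simple arithmetic. A buffer stage that receives a request for n buffers of size ten can forward a request for n * 10 elements upstream. The hypothetical helper below shows that translation. On overflow it saturates at Long.MAX_VALUE, so that a huge request stays "effectively unbounded" instead of wrapping to a negative number. It illustrates the idea only and is not Reactor's internal implementation.

```java
// Hypothetical helper illustrating how a buffer(size) stage might translate downstream
// demand (in buffers) into upstream demand (in elements). Not Reactor's implementation.
final class BufferDemand {

    static long upstreamRequest(long buffersRequested, int bufferSize) {
        if (buffersRequested <= 0 || bufferSize <= 0) {
            throw new IllegalArgumentException("request and buffer size must be positive");
        }
        try {
            return Math.multiplyExact(buffersRequested, (long) bufferSize);
        } catch (ArithmeticException overflow) {
            return Long.MAX_VALUE; // saturate instead of wrapping around to a negative number
        }
    }

    public static void main(String[] args) {
        System.out.println(upstreamRequest(1, 10));              // 10
        System.out.println(upstreamRequest(3, 10));              // 30
        System.out.println(upstreamRequest(Long.MAX_VALUE, 10)); // 9223372036854775807
    }
}
```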

This transforms the push model into a push-pull hybrid, where the downstream can pull n elements from upstream if they are readily available. But if the elements are not ready, they get pushed by the upstream whenever they are produced.

3.3.6. Hot vs Cold

The Rx family of reactive libraries distinguishes two broad categories of reactive sequences: hot and cold. This distinction mainly has to do with how the reactive stream reacts to subscribers:

- A Cold sequence starts anew for each Subscriber, including at the source of data. For example, if the source wraps an HTTP call, a new HTTP request is made for each subscription.
- A Hot sequence does not start from scratch for each Subscriber. Rather, late subscribers receive signals emitted after they subscribed. Note, however, that some hot reactive streams can cache or replay the history of emissions totally or partially. From a general perspective, a hot sequence can even emit when no subscriber is listening (an exception to the "nothing happens before you subscribe" rule).

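The difference can be illustrated with a toy model made of hypothetical classes, not Reactor's API. ColdSource restarts its data source for every subscriber, so each one sees the full sequence from its own run. HotSource emits regardless of who is listening, so a subscriber only sees values emitted after it subscribed, and a value emitted with no subscriber is lost. Neither class does any caching or replay.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Toy model, not Reactor's API: contrasts cold and hot sources.
final class HotAndCold {

    static final class ColdSource {
        private int starts;

        // Each subscription triggers a fresh run of the data source (think: one HTTP call each).
        void subscribe(Consumer<String> subscriber) {
            int run = ++starts;
            for (String value : List.of("a", "b", "c")) {
                subscriber.accept(value + run);
            }
        }
    }

    static final class HotSource {
        private final List<Consumer<String>> subscribers = new ArrayList<>();

        void subscribe(Consumer<String> subscriber) {
            subscribers.add(subscriber);
        }

        // Values go only to current subscribers; with no subscriber they are simply lost.
        void emit(String value) {
            for (Consumer<String> subscriber : subscribers) {
                subscriber.accept(value);
            }
        }
    }

    static List<List<String>> coldDemo() {
        ColdSource cold = new ColdSource();
        List<String> first = new ArrayList<>();
        List<String> second = new ArrayList<>();
        cold.subscribe(first::add);
        cold.subscribe(second::add);
        return List.of(first, second);
    }

    static List<List<String>> hotDemo() {
        HotSource hot = new HotSource();
        List<String> early = new ArrayList<>();
        List<String> late = new ArrayList<>();
        hot.emit("x"); // nobody is listening yet
        hot.subscribe(early::add);
        hot.emit("y");
        hot.subscribe(late::add);
        hot.emit("z");
        return List.of(early, late);
    }

    public static void main(String[] args) {
        System.out.println(coldDemo()); // [[a1, b1, c1], [a2, b2, c2]]
        System.out.println(hotDemo());  // [[y, z], [z]]
    }
}
```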

For more information on hot vs cold in the context of Reactor, see this reactor-specific section.

4. Reactor Core Features

The Reactor project main artifact is reactor-core, a reactive library that focuses on the Reactive Streams specification and targets Java 8.

Reactor introduces composable reactive types that implement Publisher but also provide a rich vocabulary of operators: Flux and Mono. A Flux object represents a reactive sequence of 0..N items, while a Mono object represents a single-value-or-empty (0..1) result.

This distinction carries a bit of semantic information into the type, indicating the rough cardinality of the asynchronous processing. For instance, an HTTP request produces only one response, so there is not much sense in doing a count operation. Expressing the result of such an HTTP call as a Mono<HttpResponse> thus makes more sense than expressing it as a Flux<HttpResponse>, as it offers only operators that are relevant to a context of zero items or one item.

Operators that change the maximum cardinality of the processing also switch to the relevant type. For instance, the count operator exists in Flux, but it returns a Mono<Long>.

4.1. Flux, an Asynchronous Sequence of 0-N Items

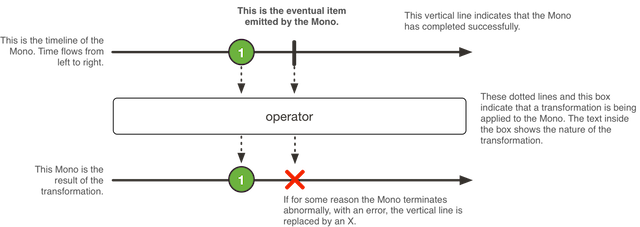

The following image shows how a Flux transforms items:

A Flux<T> is a standard Publisher<T> that represents an asynchronous sequence of 0 to N emitted items, optionally terminated by either a completion signal or an error. As in the Reactive Streams spec, these three types of signal translate to calls to a downstream Subscriber's onNext, onComplete, and onError methods.

With this large scope of possible signals, Flux is the general-purpose reactive type. Note that all events, even terminating ones, are optional: no onNext event but an onComplete event represents an empty finite sequence, but remove the onComplete and you have an infinite empty sequence (not particularly useful, except for tests around cancellation). Similarly, infinite sequences are not necessarily empty. For example, Flux.interval(Duration) produces a Flux<Long> that is infinite and emits regular ticks from a clock.

4.2. Mono, an Asynchronous 0-1 Result

The following image shows how a Mono transforms an item:

A Mono<T> is a specialized Publisher<T> that emits at most one item and then (optionally) terminates with an onComplete signal or an onError signal.

It offers only a subset of the operators that are available for a Flux, and some operators (notably those that combine the Mono with another Publisher) switch to a Flux. For example, Mono#concatWith(Publisher) returns a Flux while Mono#then(Mono) returns another Mono.

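As an illustration of which Mono operators switch to Flux, here is a short sketch (assuming reactor-core 3.x; MonoCombination is a hypothetical class name):

```java
import java.util.List;
import reactor.core.publisher.Flux;
import reactor.core.publisher.Mono;

public class MonoCombination {

    // Combining a Mono with another Publisher can yield more than one item, so the result is a Flux.
    public static Flux<Integer> concatenated() {
        return Mono.just(1).concatWith(Mono.just(2));
    }

    // then(Mono) discards the first value and still yields at most one item, so the result stays a Mono.
    public static Mono<String> sequenced() {
        return Mono.just(1).then(Mono.just("done"));
    }

    public static void main(String[] args) {
        List<Integer> both = concatenated().collectList().block();
        System.out.println(both);                // prints [1, 2]
        System.out.println(sequenced().block()); // prints done
    }
}
```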

Note that you can use a Mono to represent no-value asynchronous processes that only have the concept of completion (similar to a Runnable). To create one, you can use an empty Mono<Void>.

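A minimal sketch of a completion-only Mono<Void>, assuming reactor-core 3.x. CompletionOnly is a hypothetical class name, and Mono.fromRunnable is used here as one convenient way to wrap a side effect; Mono.empty() on its own completes without doing anything.

```java
import java.util.concurrent.atomic.AtomicBoolean;
import reactor.core.publisher.Mono;

public class CompletionOnly {

    public static boolean runAndAwait() {
        AtomicBoolean ran = new AtomicBoolean(false);
        // A Mono<Void> carries no value: it only signals completion (or an error),
        // much like a Runnable that has finished running.
        Mono<Void> task = Mono.fromRunnable(() -> ran.set(true));
        Void nothing = task.block(); // always null for a Mono<Void>
        return ran.get() && nothing == null;
    }

    public static void main(String[] args) {
        System.out.println(runAndAwait());               // prints true
        System.out.println(Mono.<Void>empty().block());  // prints null: completion without a value
    }
}
```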

4.3. Simple Ways to Create a Flux or Mono and Subscribe to It

The easiest way to get started with Flux and Mono is to use one of the numerous factory methods found in their respective classes.


For instance, to create a sequence of String, you can either enumerate them or put them in a collection and create the Flux from it, as follows:


Flux<String> seq1 = Flux.just("foo", "bar", "foobar");

List<String> iterable = Arrays.asList("foo", "bar", "foobar");
Flux<String> seq2 = Flux.fromIterable(iterable);

Other examples of factory methods include the following:

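Mono<String> noData = Mono.empty(); // (1)

Mono<String> data = Mono.just("foo");

Flux<Integer> numbersFromFiveToSeven = Flux.range(5, 3); // (2)

| 1 | Notice the factory method honors the generic type even though it has no value. |
| 2 | The first parameter is the start of the range, while the second parameter is the number of items to produce. |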

When it comes to subscribing, Flux and Mono make use of Java 8 lambdas. You have a wide choice of .subscribe() variants that take lambdas for different combinations of callbacks, as shown in the following method signatures:


subscribe(); // (1)

subscribe(Consumer<? super T> consumer); // (2)

subscribe(Consumer<? super T> consumer,
          Consumer<? super Throwable> errorConsumer); // (3)

subscribe(Consumer<? super T> consumer,
          Consumer<? super Throwable> errorConsumer,
          Runnable completeConsumer); // (4)

subscribe(Consumer<? super T> consumer,
          Consumer<? super Throwable> errorConsumer,
          Runnable completeConsumer,
          Consumer<? super Subscription> subscriptionConsumer); // (5)

| 1 | Subscribe and trigger the sequence. |
| 2 | Do something with each produced value. |
| 3 | Deal with values but also react to an error. |
| 4 | Deal with values and errors but also run some code when the sequence successfully completes. |
| 5 | Deal with values and errors and successful completion but also do something with the Subscription produced by this subscribe call. |

| These variants return a reference to the subscription that you can use to cancel the subscription when no more data is needed. Upon cancellation, the source should stop producing values and clean up any resources it created. This cancel-and-clean-up behavior is represented in Reactor by the general-purpose Disposable interface. |

4.3.1. subscribe Method Examples

This section contains minimal examples of each of the five signatures for the subscribe method. The following code shows an example of the basic method with no arguments:


Flux<Integer> ints = Flux.range(1, 3); // (1)
ints.subscribe(); // (2)

| 1 | Set up a Flux that produces three values when a subscriber attaches. |
| 2 | Subscribe in the simplest way. |

The preceding code produces no visible output, but it does work. The Flux produces three values. If we provide a lambda, we can make the values visible. The next example for the subscribe method shows one way to make the values appear:


Flux<Integer> ints = Flux.range(1, 3); // (1)
ints.subscribe(i -> System.out.println(i)); // (2)

| 1 | Set up a Flux that produces three values when a subscriber attaches. |
| 2 | Subscribe with a subscriber that will print the values. |

The preceding code produces the following output:

1
2
3

To demonstrate the next signature, we intentionally introduce an error, as shown in the following example:


Flux<Integer> ints = Flux.range(1, 4) // (1)
    .map(i -> { // (2)
        if (i <= 3) return i; // (3)
        throw new RuntimeException("Got to 4"); // (4)
    });
ints.subscribe(i -> System.out.println(i), // (5)
    error -> System.err.println("Error: " + error));

| 1 | Set up a Flux that produces four values when a subscriber attaches. |
| 2 | We need a map so that we can handle some values differently. |
| 3 | For most values, return the value. |
| 4 | For one value, force an error. |
| 5 | Subscribe with a subscriber that includes an error handler. |

We now have two lambda expressions: one for the content we expect and one for errors. The preceding code produces the following output:


1
2
3
Error: java.lang.RuntimeException: Got to 4

The next signature of the subscribe method includes both an error handler and a handler for completion events, as shown in the following example:


Flux<Integer> ints = Flux.range(1, 4); // (1)
ints.subscribe(i -> System.out.println(i),
    error -> System.err.println("Error " + error),
    () -> System.out.println("Done")); // (2)

| 1 | Set up a Flux that produces four values when a subscriber attaches. |
| 2 | Subscribe with a Subscriber that includes a handler for completion events. |

Error signals and completion signals are both terminal events and are exclusive of one another (you never get both). To make the completion consumer work, we must take care not to trigger an error.


The completion callback has no input, as represented by an empty pair of parentheses: it matches the run method in the Runnable interface. The preceding code produces the following output:


1
2
3
4
Done

The last signature of the subscribe method includes a Consumer<Subscription>.


| That variant requires you to do something with the Subscription (perform a request(long) on it or cancel() it). Otherwise the Flux hangs. |

The following example shows the last signature of the subscribe method:

Flux<Integer> ints = Flux.range(1, 4);
ints.subscribe(i -> System.out.println(i),
    error -> System.err.println("Error " + error),
    () -> System.out.println("Done"),
    sub -> sub.request(10)); // (1)

| 1 | When we subscribe, we receive a Subscription. Signal that we want up to 10 elements from the source (which will actually emit 4 elements and complete). |

4.3.2. Cancelling a subscribe() with Its Disposable

All these lambda-based variants of subscribe() have a Disposable return type. In this case, the Disposable interface represents the fact that the subscription can be cancelled by calling its dispose() method.


For a Flux or Mono, cancellation is a signal that the source should stop producing elements. However, it is NOT guaranteed to be immediate: some sources might produce elements so fast that they could complete even before receiving the cancel instruction.

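The following sketch (assuming reactor-core 3.x; DisposeDemo is a hypothetical class name) keeps the Disposable returned by subscribe() and uses it to cancel an otherwise infinite Flux.interval. As described above, cancellation is a request to stop, so a tick that is already in flight may still reach the consumer.

```java
import java.time.Duration;
import java.util.concurrent.atomic.AtomicLong;
import reactor.core.Disposable;
import reactor.core.publisher.Flux;

public class DisposeDemo {

    public static boolean subscribeThenDispose() {
        AtomicLong received = new AtomicLong();
        // Flux.interval never completes on its own: only cancellation stops it.
        Disposable disposable = Flux.interval(Duration.ofMillis(10))
                .subscribe(tick -> received.incrementAndGet());
        disposable.dispose(); // cancel: the source should stop producing ticks
        return disposable.isDisposed();
    }

    public static void main(String[] args) {
        System.out.println(subscribeThenDispose()); // prints true
    }
}
```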

Some utilities around Disposable are available in the Disposables class. Among these, Disposables.swap() creates a Disposable wrapper that lets you atomically cancel and replace a concrete Disposable. This can be useful, for instance, in a UI scenario where you want to cancel a request and replace it with a new one whenever the user clicks on a button. Disposing the wrapper itself closes it. Doing so disposes the current concrete value and all future attempted replacements.

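A small sketch of Disposables.swap(), assuming reactor-core 3.x. SwapDemo is a hypothetical class name, and subscriptions to Flux.never() stand in for long-running requests such as the button-triggered calls mentioned above.

```java
import reactor.core.Disposable;
import reactor.core.Disposables;
import reactor.core.publisher.Flux;

public class SwapDemo {

    // Returns {firstDisposed, secondDisposed, thirdDisposed, thirdAccepted}.
    public static boolean[] run() {
        Disposable.Swap current = Disposables.swap();

        Disposable first = Flux.never().subscribe();
        Disposable second = Flux.never().subscribe();

        current.update(first);   // nothing to dispose yet
        current.update(second);  // atomically disposes `first` and keeps `second`

        current.dispose();       // disposes `second` and closes the wrapper

        Disposable third = Flux.never().subscribe();
        // The wrapper is closed, so `third` is disposed right away and update returns false.
        boolean accepted = current.update(third);

        return new boolean[] {first.isDisposed(), second.isDisposed(), third.isDisposed(), accepted};
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(run())); // prints [true, true, true, false]
    }
}
```

Disposable.Swap also offers replace(Disposable), which swaps in the new value without disposing the previous one.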

Another interesting utility is Disposables.composite(…). This composite lets you collect several Disposable (for instance, multiple in-flight requests associated with a service call) and dispose all of them at once later on. Once the composite’s dispose() method has been called, any attempt to add another Disposable immediately disposes it.

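A matching sketch for Disposables.composite(…), again assuming reactor-core 3.x; CompositeDemo is a hypothetical class name and Flux.never() subscriptions stand in for in-flight requests.

```java
import reactor.core.Disposable;
import reactor.core.Disposables;
import reactor.core.publisher.Flux;

public class CompositeDemo {

    // Returns {aDisposed, bDisposed, lateDisposed, lateAdded}.
    public static boolean[] run() {
        Disposable.Composite inFlight = Disposables.composite();

        Disposable a = Flux.never().subscribe();
        Disposable b = Flux.never().subscribe();
        inFlight.add(a);
        inFlight.add(b);

        inFlight.dispose(); // disposes a and b at once

        Disposable late = Flux.never().subscribe();
        // After dispose(), adding is rejected and the newcomer is disposed immediately.
        boolean added = inFlight.add(late);

        return new boolean[] {a.isDisposed(), b.isDisposed(), late.isDisposed(), added};
    }

    public static void main(String[] args) {
        System.out.println(java.util.Arrays.toString(run())); // prints [true, true, true, false]
    }
}
```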

4.3.3. An Alternative to Lambdas: BaseSubscriber

There is an additional subscribe method that is more generic and takes a full-blown Subscriber rather than composing one out of lambdas. In order to help with writing such a Subscriber, we provide an extendable class called BaseSubscriber.


| Instances of BaseSubscriber (or subclasses of it) are single-use, meaning that a BaseSubscriber cancels its subscription to the first Publisher if it is subscribed to a second Publisher. That is because using an instance twice would violate the Reactive Streams rule that the onNext method of a Subscriber must not be called in parallel. As a result, anonymous implementations are fine only if they are declared directly within the call to Publisher#subscribe(Subscriber). |

Now we can implement one of these. We call it a SampleSubscriber. The following example shows how it would be attached to a Flux:


SampleSubscriber<Integer> ss = new SampleSubscriber<Integer>();
Flux<Integer> ints = Flux.range(1, 4);
ints.subscribe(i -> System.out.println(i),
    error -> System.err.println("Error " + error),
    () -> {System.out.println("Done");},
    s -> s.request(10));
ints.subscribe(ss);

The following example shows what SampleSubscriber could look like, as a minimalistic implementation of a BaseSubscriber:


package io.projectreactor.samples;

import org.reactivestreams.Subscription;

import reactor.core.publisher.BaseSubscriber;

public class SampleSubscriber<T> extends BaseSubscriber<T> {

    @Override
    public void hookOnSubscribe(Subscription subscription) {
        System.out.println("Subscribed");
        request(1);
    }

    @Override
    public void hookOnNext(T value) {
        System.out.println(value);
        request(1);
    }
}

The SampleSubscriber class extends BaseSubscriber, which is the recommended abstract class for user-defined Subscribers in Reactor. The class offers hooks that can be overridden to tune the subscriber’s behavior. By default, it triggers an unbounded request and behaves exactly as subscribe(). However, extending BaseSubscriber is much more useful when you want a custom request amount.


For a custom request amount, the bare minimum is to implement hookOnSubscribe(Subscription subscription) and hookOnNext(T value), as we did. In our case, the hookOnSubscribe method prints a statement to standard out and makes the first request. Then the hookOnNext method prints a statement and performs additional requests, one request at a time.


The SampleSubscriber class produces the following output:

Subscribed
1
2
3
4

BaseSubscriber also offers a requestUnbounded() method to switch to unbounded mode (equivalent to request(Long.MAX_VALUE)), as well as a cancel() method.


It also has additional hooks: hookOnComplete, hookOnError, hookOnCancel, and hookFinally (which is always called when the sequence terminates, with the type of termination passed in as a SignalType parameter).


| You almost certainly want to implement the hookOnError, hookOnCancel, and hookOnComplete methods. You may also want to implement the hookFinally method. SampleSubscriber is the absolute minimum implementation of a Subscriber that performs bounded requests. |

4.3.4. On Backpressure and Ways to Reshape Requests

When implementing backpressure in Reactor, the way consumer pressure is propagated back to the source is by sending a request to the upstream operator. The sum of current requests is sometimes referred to as the current “demand”, or “pending request”. Demand is capped at Long.MAX_VALUE, representing an unbounded request (meaning “produce as fast as you can”, basically disabling backpressure).


The first request comes from the final subscriber at subscription time, yet the most direct ways of subscribing all immediately trigger an unbounded request of Long.MAX_VALUE:


- subscribe() and most of its lambda-based variants (with the exception of the one that has a Consumer<Subscription>)
- block(), blockFirst() and blockLast()
- iterating over a toIterable() or toStream()

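One way to observe this is to record what reaches the source with doOnRequest. The sketch below assumes reactor-core 3.x; UnboundedRequestDemo is a hypothetical class name.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import reactor.core.publisher.Flux;

public class UnboundedRequestDemo {

    // Records every request that reaches the source when subscribing with subscribe().
    public static List<Long> requestsFromSubscribe() {
        List<Long> requests = new CopyOnWriteArrayList<>();
        Flux.range(1, 3).doOnRequest(requests::add).subscribe();
        return requests;
    }

    // Same source, consumed with blockLast() instead.
    public static List<Long> requestsFromBlockLast() {
        List<Long> requests = new CopyOnWriteArrayList<>();
        Flux.range(1, 3).doOnRequest(requests::add).blockLast();
        return requests;
    }

    public static void main(String[] args) {
        // Both show a single request of Long.MAX_VALUE: [9223372036854775807]
        System.out.println(requestsFromSubscribe());
        System.out.println(requestsFromBlockLast());
    }
}
```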

The simplest way of customizing the original request is to subscribe with a BaseSubscriber with the hookOnSubscribe method overridden, as the following example shows:


Flux.range(1, 10)
    .doOnRequest(r -> System.out.println("request of " + r))
    .subscribe(new BaseSubscriber<Integer>() {

        @Override
        public void hookOnSubscribe(Subscription subscription) {
            request(1);
        }

        @Override
        public void hookOnNext(Integer integer) {
            System.out.println("Cancelling after having received " + integer);
            cancel();
        }
    });

The preceding snippet prints out the following:

request of 1
Cancelling after having received 1

| When manipulating a request, you must be careful to produce enough demand for the sequence to advance, or your Flux can get “stuck”. That is why BaseSubscriber defaults to an unbounded request in hookOnSubscribe. When overriding this hook, you should usually call request at least once. |

Operators that Change the Demand from Downstream

One thing to keep in mind is that demand expressed at the subscribe level can be reshaped by each operator in the upstream chain. A textbook case is the buffer(N) operator: if it receives a request(2), it is interpreted as a demand for two full buffers. As a consequence, since buffers need N elements to be considered full, the buffer operator reshapes the request to 2 x N.

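The buffer reshaping can be observed directly. This sketch assumes reactor-core 3.x and a buffer implementation that multiplies the request as described above; BufferDemandDemo is a hypothetical class name. A request(2) for buffers of 3 is expected to arrive at the source as a single request of 6.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import org.reactivestreams.Subscription;
import reactor.core.publisher.BaseSubscriber;
import reactor.core.publisher.Flux;

public class BufferDemandDemo {

    public static List<Long> upstreamRequests() {
        List<Long> requests = new CopyOnWriteArrayList<>();
        Flux.range(1, 10)
            .doOnRequest(requests::add) // records the demand that reaches the source
            .buffer(3)
            .subscribe(new BaseSubscriber<List<Integer>>() {
                @Override
                protected void hookOnSubscribe(Subscription subscription) {
                    request(2); // ask for two full buffers
                }

                @Override
                protected void hookOnNext(List<Integer> buffer) {
                    // no further request: stop after two buffers
                }
            });
        return requests;
    }

    public static void main(String[] args) {
        System.out.println(upstreamRequests()); // expected: [6], that is 2 x 3
    }
}
```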

You might also have noticed that some operators have variants that take an int input parameter called prefetch. This is another category of operators that modify the downstream request. These are usually operators that deal with inner sequences, deriving a Publisher from each incoming element (like flatMap).


Prefetch is a way to tune the initial request made on these inner sequences. If unspecified, most of these operators start with a demand of 32.


These operators usually also implement a replenishing optimization: Once the operator has seen 75% of the prefetch request fulfilled, it re-requests 75% from upstream. This is a heuristic optimization made so that these operators proactively anticipate the upcoming requests.

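The following sketch shows the prefetch on a flatMap inner sequence, assuming reactor-core 3.x with the default prefetch of 32; PrefetchDemo is a hypothetical class name. The inner Flux.range is wrapped with hide() on the assumption that, otherwise, operator fusion could let flatMap drain the range without issuing the request calls that doOnRequest observes. Later requests are expected to be smaller replenishing batches, so the test only checks the first request and that the total covers all 100 elements.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import reactor.core.publisher.Flux;

public class PrefetchDemo {

    public static List<Long> innerRequests() {
        List<Long> inner = new CopyOnWriteArrayList<>();
        Flux.just("a")
            .flatMap(ignored -> Flux.range(1, 100)
                    .hide()                    // opt out of fusion so requests are visible
                    .doOnRequest(inner::add))  // records what flatMap requests from the inner source
            .blockLast();                      // downstream demand is unbounded
        return inner;
    }

    public static void main(String[] args) {
        // Expected to start with 32, followed by smaller replenishing requests.
        System.out.println(innerRequests());
    }
}
```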

Finally, a couple of operators let you directly tune the request: limitRate and limitRequest.


limitRate(N) splits the downstream requests so that they are propagated upstream in smaller batches. For instance, a request of 100 made to limitRate(10) would result in, at most, 10 requests of 10 being propagated to the upstream. Note that, in this form, limitRate actually implements the replenishing optimization discussed earlier.


The operator has a variant that also lets you tune the replenishing amount (referred to as the lowTide in the variant): limitRate(highTide, lowTide). Choosing a lowTide of 0 results in strict batches of highTide requests, instead of batches further reworked by the replenishing strategy.

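A sketch comparing limitRate(10) with limitRate(10, 0), assuming reactor-core 3.x; LimitRateDemo is a hypothetical class name, and hide() is used for the same fusion-related reason as in the prefetch example. In both cases no single upstream request should exceed 10; with a lowTide of 0, every request is expected to be exactly 10.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Function;
import reactor.core.publisher.Flux;

public class LimitRateDemo {

    // Applies the given limiter and collects the requests that reach the source.
    static List<Long> upstreamRequests(Function<Flux<Integer>, Flux<Integer>> limiter) {
        List<Long> requests = new CopyOnWriteArrayList<>();
        Flux.range(1, 100)
            .hide()                     // avoid fusion so doOnRequest sees every request
            .doOnRequest(requests::add)
            .transform(limiter)
            .blockLast();               // blockLast() requests Long.MAX_VALUE downstream
        return requests;
    }

    public static List<Long> replenishing() {
        return upstreamRequests(f -> f.limitRate(10));    // refills before a batch is fully consumed
    }

    public static List<Long> strictBatches() {
        return upstreamRequests(f -> f.limitRate(10, 0)); // lowTide 0: strict batches of 10
    }

    public static void main(String[] args) {
        System.out.println(replenishing());
        System.out.println(strictBatches());
    }
}
```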

limitRequest(N), on the other hand, caps the downstream request to a maximum total demand. It adds up requests up to N. If a single request does not push the total demand past N, that particular request is wholly propagated upstream. After that amount has been emitted by the source, limitRequest considers the sequence complete, sends an onComplete signal downstream, and cancels the source.

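A minimal sketch of limitRequest, assuming a reactor-core 3.x version that still provides it (later releases steer users toward take(n, true) instead); LimitRequestDemo is a hypothetical class name. Even though collectList() requests an unbounded amount, only 5 elements come through before the sequence completes.

```java
import java.util.List;
import reactor.core.publisher.Flux;

public class LimitRequestDemo {

    public static List<Integer> firstFive() {
        return Flux.range(1, 100)
                .limitRequest(5) // caps total demand at 5, then completes and cancels the source
                .collectList()
                .block();
    }

    public static void main(String[] args) {
        System.out.println(firstFive()); // prints [1, 2, 3, 4, 5]
    }
}
```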

4.4. Programmatically creating a sequence

In this section, we introduce the creation of a Flux or a Mono by programmatically defining its associated events (onNext, onError, and onComplete). All these methods share the fact that they expose an API to trigger the events that we call a sink. There are actually a few sink variants, which we’ll get to shortly.


4.4.1. Synchronous generate

The simplest form of programmatic creation of a Flux is through the generate method, which takes a generator function.


This is for synchronous and one-by-one emissions, meaning that the sink is a SynchronousSink and that its next() method can only be called at most once per callback invocation. You can then additionally call error(Throwable) or complete(), but this is optional.

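Before the state-based variant, here is the plain one: a sketch assuming reactor-core 3.x, where StatelessGenerate is a hypothetical class name. The generator calls sink.next exactly once per invocation and never completes, so take(3) is what ends the sequence.

```java
import java.util.List;
import reactor.core.publisher.Flux;

public class StatelessGenerate {

    public static List<String> threeTicks() {
        // Each generator invocation emits exactly one value through the SynchronousSink.
        return Flux.<String>generate(sink -> sink.next("tick"))
                .take(3) // the generator never calls complete(), so cancel after three items
                .collectList()
                .block();
    }

    public static void main(String[] args) {
        System.out.println(threeTicks()); // prints [tick, tick, tick]
    }
}
```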

The most useful variant is probably the one that also lets you keep a state that you can refer to in your sink usage to decide what to emit next. The generator function then becomes a BiFunction<S, SynchronousSink<T>, S>, with <S> the type of the state object. You have to provide a Callable<S> (a supplier of the initial state), and your generator function now returns a new state on each round.


For instance, you could use an int as the state:

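Flux<String> flux = Flux.generate(
    () -> 0, // (1)
    (state, sink) -> {
        sink.next("3 x " + state + " = " + 3*state); // (2)
        if (state == 10) sink.complete(); // (3)
        return state + 1; // (4)
    });

| 1 | We supply the initial state value of 0. |
| 2 | We use the state to choose what to emit (a row in the multiplication table of 3). |
| 3 | We also use it to choose when to stop. |
| 4 | We return a new state that we use in the next invocation (unless the sequence terminated in this one). |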

The preceding code generates the table of 3, as the following sequence:

3 x 0 = 0
3 x 1 = 3
3 x 2 = 6
3 x 3 = 9
3 x 4 = 12
3 x 5 = 15
3 x 6 = 18
3 x 7 = 21
3 x 8 = 24
3 x 9 = 27
3 x 10 = 30

You can also use a mutable <S>. The example above could, for instance, be rewritten using a single AtomicLong as the state, mutating it on each round:


Flux<String> flux = Flux.generate(
    AtomicLong::new, // (1)
    (state, sink) -> {
        long i = state.getAndIncrement(); // (2)
        sink.next("3 x " + i + " = " + 3*i);
        if (i == 10) sink.complete();
        return state; // (3)
    });

| 1 | This time, we generate a mutable object as the state. |
| 2 | We mutate the state here. |
| 3 | We return the same instance as the new state. |

| If your state object needs to clean up some resources, use the generate(Callable<S>, BiFunction, Consumer<S>) variant to clean up the last state instance. |

The following example uses the generate method that includes a Consumer:

Flux<String> flux = Flux.generate(
    AtomicLong::new,
    (state, sink) -> { // (1)
        long i = state.getAndIncrement(); // (2)
        sink.next("3 x " + i + " = " + 3*i);
        if (i == 10) sink.complete();
        return state; // (3)
    }, (state) -> System.out.println("state: " + state)); // (4)

| 1 | Again, we generate a mutable object as the state. |
| 2 | We mutate the state here. |
| 3 | We return the same instance as the new state. |
| 4 | We see the last state value (11) as the output of this Consumer lambda. |

In the case of the state containing a database connection or other resource that needs to be handled at the end of the process, the Consumer lambda could close the connection or otherwise handle any tasks that should be done at the end of the process.


4.4.2. Asynchronous and Multi-threaded: create

create is a more advanced form of programmatic creation of a Flux which is suitable for multiple emissions per round, even from multiple threads.


It exposes a FluxSink, with its next, error, and complete methods. Contrary to generate, it doesn’t have a state-based variant. On the other hand, it can trigger multi-threaded events in the callback.


| create can be very useful to bridge an existing API with the reactive world, such as an asynchronous API based on listeners. |

| create doesn’t parallelize your code nor does it make it asynchronous, even though it can be used with asynchronous APIs. If you block within the create lambda, you expose yourself to deadlocks and similar side effects. Even with the use of subscribeOn, there’s the caveat that a long-blocking create lambda (such as an infinite loop calling sink.next(t)) can lock the pipeline: the requests would never be performed due to the loop starving the same thread they are supposed to run from. Use the subscribeOn(Scheduler, false) variant: requestOnSeparateThread = false will use the Scheduler thread for the create and still let data flow by performing request in the original thread. |

Imagine that you use a listener-based API. It processes data by chunks and has two events: (1) a chunk of data is ready and (2) the processing is complete (terminal event), as represented in the MyEventListener interface:


interface MyEventListener<T> {
    void onDataChunk(List<T> chunk);
    void processComplete();
}

You can use create to bridge this into a Flux<T>:

Flux<String> bridge = Flux.create(sink -> {
    myEventProcessor.register( // (4)
        new MyEventListener<String>() { // (1)

            public void onDataChunk(List<String> chunk) {
                for (String s : chunk) {
                    sink.next(s); // (2)
                }
            }

            public void processComplete() {
                sink.complete(); // (3)
            }
        });
});

| 1 | Bridge to the MyEventListener API. |
| 2 | Each element in a chunk becomes an element in the Flux. |
| 3 | The processComplete event is translated to onComplete. |
| 4 | All of this is done asynchronously whenever the myEventProcessor executes. |

Additionally, since create can bridge asynchronous APIs and manages backpressure, you can refine its backpressure behavior by indicating an OverflowStrategy:


- IGNORE to completely ignore downstream backpressure requests. This may yield IllegalStateException when queues get full downstream.
- ERROR to signal an IllegalStateException when the downstream can’t keep up.
- DROP to drop the incoming signal if the downstream is not ready to receive it.
- LATEST to let downstream only get the latest signals from upstream.
- BUFFER (the default) to buffer all signals if the downstream can’t keep up. (This does unbounded buffering and may lead to OutOfMemoryError.)

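To illustrate one of these strategies, the sketch below (assuming reactor-core 3.x; OverflowDropDemo is a hypothetical class name) uses DROP with a producer that pushes five values at once while the subscriber has only requested one. Only the first value is expected to get through; the rest are dropped because there is no outstanding demand when they arrive.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import org.reactivestreams.Subscription;
import reactor.core.publisher.BaseSubscriber;
import reactor.core.publisher.Flux;
import reactor.core.publisher.FluxSink;

public class OverflowDropDemo {

    public static List<Integer> receivedWithDrop() {
        List<Integer> received = new CopyOnWriteArrayList<>();
        Flux<Integer> source = Flux.create(sink -> {
            // The producer ignores demand and pushes five values right away.
            for (int i = 1; i <= 5; i++) {
                sink.next(i);
            }
            sink.complete();
        }, FluxSink.OverflowStrategy.DROP);

        source.subscribe(new BaseSubscriber<Integer>() {
            @Override
            protected void hookOnSubscribe(Subscription subscription) {
                request(1); // demand for a single element only
            }

            @Override
            protected void hookOnNext(Integer value) {
                received.add(value); // no further request
            }
        });
        return received;
    }

    public static void main(String[] args) {
        System.out.println(receivedWithDrop()); // prints [1]
    }
}
```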

| Mono also has a create generator. The MonoSink of Mono’s create doesn’t allow several emissions. It will drop all signals after the first one. |
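A minimal sketch of that behavior, assuming reactor-core 3.x; MonoCreateDemo is a hypothetical class name. The second success call is dropped rather than delivered.

```java
import reactor.core.publisher.Mono;

public class MonoCreateDemo {

    public static String firstSignalWins() {
        return Mono.<String>create(sink -> {
            sink.success("first");
            sink.success("second"); // dropped: a MonoSink delivers at most one signal
        }).block();
    }

    public static void main(String[] args) {
        System.out.println(firstSignalWins()); // prints first
    }
}
```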

4.4.3. Asynchronous but single-threaded: push

push is a middle ground between generate and create which is suitable for processing events from a single producer. It is similar to create in the sense that it can also be asynchronous and can manage backpressure using any of the overflow strategies supported by create. However, only one producing thread may invoke next, complete or error at a time.


Flux<String> bridge = Flux.push(sink -> {
    myEventProcessor.register(
        new SingleThreadEventListener<String>() { // (1)

            public void onDataChunk(List<String> chunk) {
                for (String s : chunk) {
                    sink.next(s); // (2)
                }
            }

            public void processComplete() {
                sink.complete(); // (3)
            }

            public void processError(Throwable e) {
                sink.error(e); // (4)
            }
        });
});

| 1 | Bridge to the SingleThreadEventListener API. |
| 2 | Events are pushed to the sink using next from a single listener thread. |
| 3 | complete event generated from the same listener thread. |
| 4 | error event also generated from the same listener thread. |

A hybrid push/pull model

Most Reactor operators, like create, follow a hybrid push/pull model. What we mean by that is that despite most of the processing being asynchronous (suggesting a push approach), there is a small pull component to it: the request.


The consumer pulls data from the source in the sense that the source won’t emit anything until first requested. The source pushes data to the consumer whenever it becomes available, but within the bounds of its requested amount.


Note that push() and create() both let you set up an onRequest consumer in order to manage the request amount and to ensure that data is pushed through the sink only when there is a pending request.


Flux<String> bridge = Flux.create(sink -> {
    myMessageProcessor.register(
        new MyMessageListener<String>() {

            public void onMessage(List<String> messages) {
                for (String s : messages) {
                    sink.next(s); // (3)
                }
            }
        });
    sink.onRequest(n -> {
        List<String> messages = myMessageProcessor.getHistory(n); // (1)
        for (String s : messages) {
            sink.next(s); // (2)
        }
    });
});

| 1 | Poll for messages when requests are made. |
| 2 | If messages are available immediately, push them to the sink. |
| 3 | The remaining messages that arrive asynchronously later are also delivered. |

Cleaning up after push() or create()

Two callbacks, onDispose and onCancel, perform any cleanup on cancellation or termination. onDispose can be used to perform cleanup when the Flux completes, errors out, or is cancelled. onCancel can be used to perform any action specific to cancellation prior to cleanup with onDispose.


Flux<String> bridge = Flux.create(sink -> {
    sink.onRequest(n -> channel.poll(n))
        .onCancel(() -> channel.cancel()) // (1)
        .onDispose(() -> channel.close()); // (2)
});

| 1 | onCancel is invoked first, for cancel signal only. |
| 2 | onDispose is invoked for complete, error, or cancel signals. |

4.4.4. Handle

The handle method is a bit different: it is an instance method, meaning that it is chained on an existing source (as are the common operators). It is present in both Mono and Flux.


It is close to generate, in the sense that it uses a SynchronousSink and only allows one-by-one emissions. However, handle can be used to generate an arbitrary value out of each source element, possibly skipping some elements. In this way, it can serve as a combination of map and filter. The signature of handle is as follows:


Flux<R> handle(BiConsumer<T, SynchronousSink<R>>);

Let’s consider an example. The reactive streams specification disallows null values in a sequence. What if you want to perform a map but you want to use a preexisting method as the map function, and that method sometimes returns null?


For instance, the following method can be applied safely to a source of integers:


public String alphabet(int letterNumber) {
    if (letterNumber < 1 || letterNumber > 26) {
        return null;
    }
    int letterIndexAscii = 'A' + letterNumber - 1;
    return "" + (char) letterIndexAscii;
}

We can then use handle to remove any nulls:


Using handle for a "map and eliminate nulls" scenario:

Flux<String> alphabet = Flux.just(-1, 30, 13, 9, 20)
    .handle((i, sink) -> {
        String letter = alphabet(i); // (1)
        if (letter != null) // (2)
            sink.next(letter); // (3)
    });

alphabet.subscribe(System.out::println);

| 1 | Map to letters. |
| 2 | If the "map function" returns null… |
| 3 | Filter it out by not calling sink.next. |

Which will print out:

M
I
T

4.5. Threading and Schedulers

Reactor, like RxJava, can be considered to be concurrency-agnostic. That is, it does not enforce a concurrency model. Rather, it leaves you, the developer, in command. However, that does not prevent the library from helping you with concurrency.


Obtaining a Flux or a Mono does not necessarily mean that it runs in a dedicated Thread. Instead, most operators continue working in the Thread on which the previous operator executed. Unless specified, the topmost operator (the source) itself runs on the Thread in which the subscribe() call was made. The following example runs a Mono in a new thread:


public static void main(String[] args) throws InterruptedException {
    final Mono<String> mono = Mono.just("hello "); // (1)

    Thread t = new Thread(() -> mono
        .map(msg -> msg + "thread ")
        .subscribe(v -> // (2)
            System.out.println(v + Thread.currentThread().getName()) // (3)
        )
    );
    t.start();
    t.join();
}

| 1 | The Mono<String> is assembled in thread main. |
| 2 | However, it is subscribed to in thread Thread-0. |
| 3 | As a consequence, both the map and the onNext callback actually run in Thread-0. |

The preceding code produces the following output:

hello thread Thread-0

In Reactor, the execution model and where the execution happens are determined by the Scheduler that is used. A Scheduler has scheduling responsibilities similar to an ExecutorService, but having a dedicated abstraction lets it do more, notably acting as a clock and enabling a wider range of implementations (virtual time for tests, trampolining or immediate scheduling, and so on).


The Schedulers class has static methods that give access to the following execution contexts:


- No execution context (Schedulers.immediate()): at processing time, the submitted Runnable will be directly executed, effectively running them on the current Thread (can be seen as a "null object" or no-op Scheduler).
- A single, reusable thread (Schedulers.single()). Note that this method reuses the same thread for all callers, until the Scheduler is disposed. If you want a per-call dedicated thread, use Schedulers.newSingle() for each call.
- An unbounded elastic thread pool (Schedulers.elastic()). This one is no longer preferred with the introduction of Schedulers.boundedElastic(), as it has a tendency to hide backpressure problems and lead to too many threads (see below).
- A bounded elastic thread pool (Schedulers.boundedElastic()). Like its predecessor elastic(), it creates new worker pools as needed and reuses idle ones. Worker pools that stay idle for too long (the default is 60s) are also disposed. Unlike its elastic() predecessor, it has a cap on the number of backing threads it can create (default is number of CPU cores x 10). Up to 100 000 tasks submitted after the cap has been reached are enqueued and will be re-scheduled when a thread becomes available (when scheduling with a delay, the delay starts when the thread becomes available). This is a better choice for I/O blocking work. Schedulers.boundedElastic() is a handy way to give a blocking process its own thread so that it does not tie up other resources, without pressuring the system too much with new threads. See How Do I Wrap a Synchronous, Blocking Call?
- A fixed pool of workers that is tuned for parallel work (Schedulers.parallel()). It creates as many workers as you have CPU cores.

没有执行上下文(Schedulers.immediate()):在处理时,提交的文件Runnable 将被直接执行,从而有效地在当前Thread上运行(可以视为“空对象”或no-op Scheduler)。

-

单个可重用线程(Schedulers.single())。请注意,此方法对所有调用方都使用相同的线程,直到调度程序被释放为止。如果您需要一个每次调用专用线程,请Schedulers.newSingle()为每个调用使用。

-

无限制的弹性线程池(Schedulers.elastic())。引入时Schedulers.boundedElastic(),不再首选该线程,因为它倾向于隐藏背压问题并导致线程过多(请参见下文)。

-

有界的弹性线程池(Schedulers.boundedElastic())。像其前身一样elastic(),它根据需要创建新的工作池,并重用空闲的工作池。闲置时间过长(默认值为60s)的工作池也将被丢弃。 与之前的elastic()版本不同,它对可以创建的支持线程数进行了限制(默认值为CPU内核数x 10)。达到上限后,最多可提交10万个任务,并在线程可用时重新调度(当延迟调度时,延迟在线程可用时开始)。 这是I / O阻止工作的更好选择。 Schedulers.boundedElastic()是一种为阻塞进程分配自己的线程的简便方法,这样它就不会占用其他资源。请参阅How Do I Wrap a Synchronous, Blocking Call? 如何包装同步阻塞呼叫?,但使用新线程不会对系统造成太大压力。

-

已调整为并行工作的固定工作池(Schedulers.parallel())。它创建的工作线程数量与CPU内核数量一样多。
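One way to see these execution contexts is to ask each one which thread runs a trivial task. The following sketch does that. It assumes reactor-core 3.3 or later, where boundedElastic() exists. The class name SchedulerThreadNames is hypothetical. The thread-name prefixes mentioned in the comments ("single-", "parallel-", "boundedElastic-") are what recent Reactor releases produce, so treat them as illustrative rather than guaranteed. elastic() is left out, since the list above already steers you toward boundedElastic().

```java
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: which thread does each shared Scheduler use?
public class SchedulerThreadNames {

    /** Subscribes on the given Scheduler and reports which thread produced the value. */
    static String threadNameOf(Scheduler scheduler) {
        return Mono.fromCallable(() -> Thread.currentThread().getName())
                .subscribeOn(scheduler)
                .block(); // blocking is fine here: we are on the caller thread, not a Reactor one
    }

    public static void main(String[] args) {
        // immediate() runs on the caller thread (for example "main")
        System.out.println("immediate:      " + threadNameOf(Schedulers.immediate()));
        // typically "single-1", "parallel-N" and "boundedElastic-N" in recent versions
        System.out.println("single:         " + threadNameOf(Schedulers.single()));
        System.out.println("parallel:       " + threadNameOf(Schedulers.parallel()));
        System.out.println("boundedElastic: " + threadNameOf(Schedulers.boundedElastic()));
    }
}
```

The shared Schedulers are not disposed here. They are global instances managed by Reactor, unlike the newXXX variants.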

Additionally, you can create a Scheduler out of any pre-existing ExecutorService by using Schedulers.fromExecutorService(ExecutorService). (You can also create one from an Executor, although doing so is discouraged.)
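As a sketch of wrapping an existing pool, the example below hands a fixed thread pool to Schedulers.fromExecutorService and checks that work subscribed on the resulting Scheduler runs on that pool's threads. The pool, its thread name "legacy-pool", and the class name ExecutorServiceScheduler are hypothetical stand-ins for whatever executor your application already has.

```java
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

import reactor.core.publisher.Mono;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: reuse a pre-existing ExecutorService as a Scheduler
public class ExecutorServiceScheduler {

    static String runOnWrappedExecutor() {
        // Stand-in for an executor the application already owns; threads named by us
        ExecutorService executor = Executors.newFixedThreadPool(2, runnable -> {
            Thread t = new Thread(runnable, "legacy-pool");
            t.setDaemon(true);
            return t;
        });
        Scheduler scheduler = Schedulers.fromExecutorService(executor);
        try {
            return Mono.fromCallable(() -> Thread.currentThread().getName())
                    .subscribeOn(scheduler)
                    .block();
        } finally {
            scheduler.dispose();
            executor.shutdown(); // harmless if dispose() already shut the executor down
        }
    }

    public static void main(String[] args) {
        System.out.println(runOnWrappedExecutor());
    }
}
```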

You can also create new instances of the various scheduler types by using the newXXX methods. For example, Schedulers.newParallel(yourScheduleName) creates a new parallel scheduler named yourScheduleName.
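A minimal sketch of the newXXX pattern follows. It creates a dedicated parallel scheduler, runs one task on it, and disposes it afterwards, because a newXXX instance belongs to the caller. The class name NamedSchedulerDemo is hypothetical. The "yourScheduleName-N" thread naming shown in the comment is what recent Reactor versions produce.

```java
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: a dedicated, named parallel Scheduler
public class NamedSchedulerDemo {

    /** Creates a new parallel scheduler, runs one task on it, then disposes it. */
    static String runOnNamedParallel(String schedulerName) {
        Scheduler scheduler = Schedulers.newParallel(schedulerName);
        try {
            return Mono.fromCallable(() -> Thread.currentThread().getName())
                    .subscribeOn(scheduler)
                    .block();
        } finally {
            // Unlike the shared Schedulers.parallel(), this instance must be released by us
            scheduler.dispose();
        }
    }

    public static void main(String[] args) {
        // typically prints something like "yourScheduleName-1"
        System.out.println(runOnNamedParallel("yourScheduleName"));
    }
}
```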

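| While boundedElastic is made to help with legacy blocking code if it cannot be avoided, single and parallel are not. As a consequence, the use of Reactor blocking APIs (block(), blockFirst(), blockLast(), as well as iterating over toIterable() or toStream()) inside the default single and parallel schedulers results in an IllegalStateException being thrown. Custom Schedulers can also be marked as "non blocking only" by creating instances of Thread that implement the NonBlocking marker interface. |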
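The guard described in this note can be observed with a short sketch. It calls block() from inside a task running on a given Scheduler and reports either the result or the exception type. It assumes reactor-core 3.3 or later. The class name BlockingGuardDemo is hypothetical. Mono.delay is used for the inner Mono so that block() genuinely has to wait, rather than returning an already-available value.

```java
import java.time.Duration;

import reactor.core.publisher.Mono;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: blocking inside parallel vs boundedElastic
public class BlockingGuardDemo {

    /** Calls block() from inside a task running on the given Scheduler and reports the outcome. */
    static String blockInside(Scheduler scheduler) {
        return Mono.fromCallable(() ->
                        // An asynchronous Mono, so block() really has to wait
                        Mono.delay(Duration.ofMillis(10)).thenReturn("blocked fine").block())
                .subscribeOn(scheduler)
                // Surface the failure (if any) as a value instead of throwing from the outer block()
                .onErrorResume(e -> Mono.just(e.getClass().getSimpleName()))
                .block(); // the outer block() runs on the caller thread, which is allowed
    }

    public static void main(String[] args) {
        System.out.println("parallel:       " + blockInside(Schedulers.parallel()));
        System.out.println("boundedElastic: " + blockInside(Schedulers.boundedElastic()));
    }
}
```

On parallel the inner block() should be rejected with an IllegalStateException. On boundedElastic the same call is allowed to wait.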

Some operators use a specific scheduler from Schedulers by default (and usually give you the option of providing a different one). For instance, calling the Flux.interval(Duration.ofMillis(300)) factory method produces a Flux<Long> that ticks every 300ms. By default, this is enabled by Schedulers.parallel(). The following line changes the Scheduler to a new instance similar to Schedulers.single():

Flux.interval(Duration.ofMillis(300), Schedulers.newSingle("test"))

Reactor offers two means of switching the execution context (or Scheduler) in a reactive chain: publishOn and subscribeOn. Both take a Scheduler and let you switch the execution context to that scheduler. But the placement of publishOn in the chain matters, while the placement of subscribeOn does not. To understand that difference, you first have to remember that nothing happens until you subscribe.
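The rule that nothing happens until you subscribe can be checked with a counter. The sketch below builds a pipeline with a doOnNext side effect, reads the counter, then subscribes with blockLast() and reads it again. The class name NothingHappensUntilSubscribe is hypothetical.

```java
import java.util.concurrent.atomic.AtomicInteger;

import reactor.core.publisher.Flux;

// Hypothetical demo class: assembly vs subscription
public class NothingHappensUntilSubscribe {

    /** Returns how many elements were seen before and after subscribing. */
    static int[] countBeforeAndAfterSubscribe() {
        AtomicInteger seen = new AtomicInteger();

        // Assembly only: this builds the pipeline but runs nothing yet
        Flux<Integer> flux = Flux.range(1, 3)
                .doOnNext(i -> seen.incrementAndGet());

        int before = seen.get();
        flux.blockLast(); // subscribing is what triggers the data flow
        int after = seen.get();
        return new int[] { before, after };
    }

    public static void main(String[] args) {
        int[] counts = countBeforeAndAfterSubscribe();
        System.out.println("before subscribe: " + counts[0] + ", after: " + counts[1]);
    }
}
```

This is also why subscribeOn can act on the whole chain regardless of its position: the threads are only chosen once the subscription travels back up to the source.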

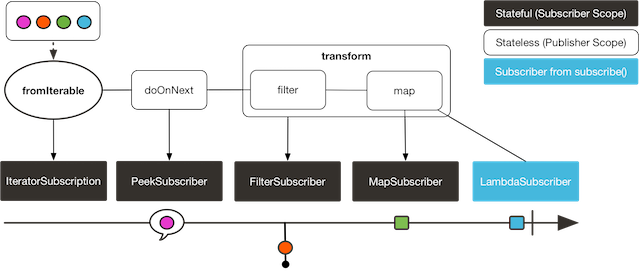

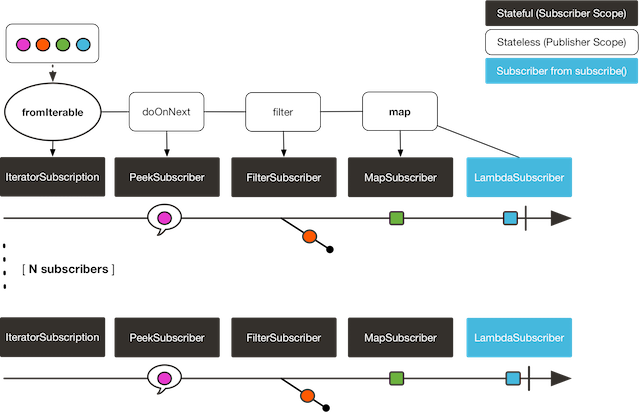

In Reactor, when you chain operators, you can wrap as many Flux and Mono implementations inside one another as you need. Once you subscribe, a chain of Subscriber objects is created, backward (up the chain) to the first publisher. This is effectively hidden from you. All you can see is the outer layer of Flux (or Mono) and Subscription, but these intermediate operator-specific subscribers are where the real work happens.

With that knowledge, we can have a closer look at the publishOn and subscribeOn operators:

4.5.1. The publishOn Method

publishOn applies in the same way as any other operator, in the middle of the subscriber chain. It takes signals from upstream and replays them downstream while executing the callback on a worker from the associated Scheduler. Consequently, it affects where the subsequent operators execute (until another publishOn is chained in), as follows:

- Changes the execution context to one Thread picked by the Scheduler.
- As per the specification, onNext calls happen in sequence, so this uses up a single thread.
- Unless they work on a specific Scheduler, operators after publishOn continue execution on that same thread.

The following example uses the publishOn method:

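Scheduler s = Schedulers.newParallel("parallel-scheduler", 4); // (1)

final Flux<String> flux = Flux
        .range(1, 2)
        .map(i -> 10 + i) // (2)
        .publishOn(s) // (3)
        .map(i -> "value " + i); // (4)

new Thread(() -> flux.subscribe(System.out::println)).start(); // (5)

| 1 | Creates a new Scheduler backed by four Thread instances. |
| 2 | The first map runs on the anonymous thread in (5). |
| 3 | The publishOn switches the rest of the sequence (everything downstream of it) onto a Thread picked from (1). |
| 4 | The second map runs on the Thread from (1). |
| 5 | This anonymous Thread is the one where the subscription happens. The print happens on the latest execution context, which is the one from publishOn. |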
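To check the callouts above without relying on console output from a background thread, the following variant records the thread name seen by each map. It subscribes from the calling thread with block(). It assumes reactor-core on the classpath. The class name PublishOnThreads is hypothetical. The "parallel-scheduler-N" naming is what recent Reactor versions give to threads of newParallel("parallel-scheduler", 4).

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

import reactor.core.publisher.Flux;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: where each map runs around publishOn
public class PublishOnThreads {

    // Thread names recorded by each map, in emission order
    static final List<String> firstMapThreads = new CopyOnWriteArrayList<>();
    static final List<String> secondMapThreads = new CopyOnWriteArrayList<>();

    static List<String> run() {
        firstMapThreads.clear();
        secondMapThreads.clear();
        Scheduler s = Schedulers.newParallel("parallel-scheduler", 4);
        try {
            return Flux.range(1, 2)
                    .map(i -> {
                        firstMapThreads.add(Thread.currentThread().getName()); // upstream of publishOn
                        return 10 + i;
                    })
                    .publishOn(s)
                    .map(i -> {
                        secondMapThreads.add(Thread.currentThread().getName()); // downstream of publishOn
                        return "value " + i;
                    })
                    .collectList()
                    .block(); // subscribes from the calling thread and waits
        } finally {
            s.dispose();
        }
    }

    public static void main(String[] args) {
        System.out.println(run());
        System.out.println("first map:  " + firstMapThreads);
        System.out.println("second map: " + secondMapThreads);
    }
}
```

The first map stays on the subscribing thread, while everything after publishOn moves to a single thread of the new scheduler. This matches the "uses up a single thread" point in the list above.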

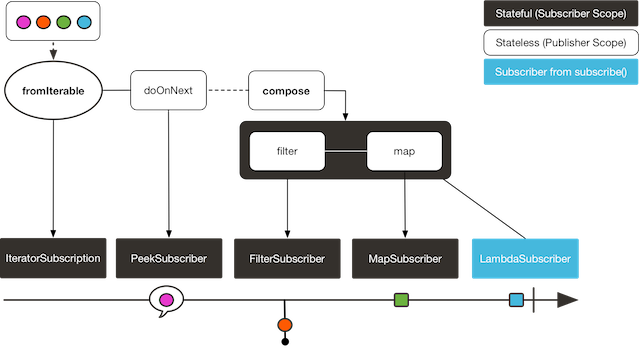

4.5.2. The subscribeOn Method

subscribeOn applies to the subscription process, when that backward chain is constructed. As a consequence, no matter where you place the subscribeOn in the chain, it always affects the context of the source emission. However, this does not affect the behavior of subsequent calls to publishOn: they still switch the execution context for the part of the chain after them.

- Changes the Thread from which the whole chain of operators subscribes.
- Picks one thread from the Scheduler.

| Only the earliest subscribeOn call in the chain (the one closest to the source) is actually taken into account. |
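A sketch of this rule places two subscribeOn calls on the same chain, each with its own single-threaded scheduler. It then reports the thread on which the source actually ran. The class name EarliestSubscribeOnWins and the scheduler names "first" and "second" are hypothetical.

```java
import reactor.core.publisher.Mono;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: two subscribeOn calls, only the earliest one matters for the source
public class EarliestSubscribeOnWins {

    static String sourceThread() {
        Scheduler first = Schedulers.newSingle("first");
        Scheduler second = Schedulers.newSingle("second");
        try {
            return Mono.fromCallable(() -> Thread.currentThread().getName())
                    .subscribeOn(first)   // earliest: closest to the source
                    .subscribeOn(second)  // later in the chain: does not move the source
                    .block();
        } finally {
            first.dispose();
            second.dispose();
        }
    }

    public static void main(String[] args) {
        System.out.println("source ran on " + sourceThread());
    }
}
```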

The following example uses the subscribeOn method:

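Scheduler s = Schedulers.newParallel("parallel-scheduler", 4); // (1)

final Flux<String> flux = Flux
        .range(1, 2)
        .map(i -> 10 + i) // (2)
        .subscribeOn(s) // (3)
        .map(i -> "value " + i); // (4)

new Thread(() -> flux.subscribe(System.out::println)).start(); // (5)

| 1 | Creates a new Scheduler backed by four Thread instances. |
| 2 | The first map runs on one of these four threads... |
| 3 | ...because subscribeOn switches the whole sequence right from subscription time (5). |
| 4 | The second map also runs on the same thread. |
| 5 | This anonymous Thread is the one where the subscription initially happens, but subscribeOn immediately shifts it to one of the four scheduler threads. |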
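For comparison with the publishOn variant, the sketch below records the thread used by both map calls when the chain uses subscribeOn instead. It subscribes from the calling thread with block(). The class name SubscribeOnThreads is hypothetical, and the "parallel-scheduler-N" thread naming is what recent Reactor versions produce.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;

import reactor.core.publisher.Flux;
import reactor.core.scheduler.Scheduler;
import reactor.core.scheduler.Schedulers;

// Hypothetical demo class: where each map runs when subscribeOn is used
public class SubscribeOnThreads {

    // Thread names recorded by both maps, in the order they ran
    static final List<String> mapThreads = new CopyOnWriteArrayList<>();

    static List<String> run() {
        mapThreads.clear();
        Scheduler s = Schedulers.newParallel("parallel-scheduler", 4);
        try {
            return Flux.range(1, 2)
                    .map(i -> {
                        mapThreads.add(Thread.currentThread().getName()); // before subscribeOn
                        return 10 + i;
                    })
                    .subscribeOn(s) // affects the source, wherever it is placed
                    .map(i -> {
                        mapThreads.add(Thread.currentThread().getName()); // after subscribeOn
                        return "value " + i;
                    })
                    .collectList()
                    .block(); // the subscription starts on the calling thread
        } finally {
            s.dispose();
        }
    }

    public static void main(String[] args) {
        System.out.println(run());
        System.out.println("map threads: " + mapThreads);
    }
}
```

Here both maps report the same scheduler thread, even though the subscription was initiated from the caller.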

4.6. Handling Errors

| For a quick look at the available operators for error handling, see the relevant operator decision tree. |

In Reactive Streams, errors are terminal events. As soon as an error occurs, it stops the sequence and gets propagated down the chain of operators to the last step, the Subscriber you defined and its onError method.

Such errors should still be dealt with at the application level. For instance, you might display an error notification in a UI or send a meaningful error payload in a REST endpoint. For this reason, the subscriber's onError method should always be defined.

| If not defined, onError throws an UnsupportedOperationException. You can further detect and triage it with the Exceptions.isErrorCallbackNotImplemented method. |
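The following sketch subscribes with an explicit error consumer and records every signal it receives. It shows both that onError is defined and that the error ends the sequence: the element after the failing one is never processed, and the completion callback never runs. The class name ErrorIsTerminal is hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

import reactor.core.publisher.Flux;

// Hypothetical demo class: an error is a terminal signal
public class ErrorIsTerminal {

    static List<String> run() {
        // A plain ArrayList is enough: this pipeline runs synchronously on the calling thread
        List<String> events = new ArrayList<>();
        Flux.just(1, 2, 0, 4)
                .map(i -> 100 / i) // 0 triggers an ArithmeticException
                .subscribe(
                        value -> events.add("next " + value),
                        error -> events.add("error " + error.getClass().getSimpleName()), // always provide this
                        () -> events.add("complete"));
        return events;
    }

    public static void main(String[] args) {
        // prints [next 100, next 50, error ArithmeticException]
        System.out.println(run());
    }
}
```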

Reactor also offers alternative means of dealing with errors in the middle of the chain, as error-handling operators. The following example shows how to do so:

Flux.just(1, 2, 0)
    .map(i -> "100 / " + i + " = " + (100 / i)) // this triggers an error with 0
    .onErrorReturn("Divided by zero :("); // error-handling example

| Before you learn about error-handling operators, you must keep in mind that any error in a reactive sequence is a terminal event. Even if an error-handling operator is used, it does not let the original sequence continue. Rather, it converts the onError signal into the start of a new sequence (the fallback one). In other words, it replaces the terminated sequence upstream of it. |
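To make the "replaces the terminated sequence" point visible, the sketch below extends the earlier onErrorReturn example with an extra element after the 0. It collects everything into a list. The fallback value takes the place of the failed element, and the 4 is never processed, because the original sequence has already terminated. The class name OnErrorReturnFallback is hypothetical.

```java
import java.util.List;

import reactor.core.publisher.Flux;

// Hypothetical demo class: onErrorReturn replaces, it does not resume
public class OnErrorReturnFallback {

    static List<String> run() {
        return Flux.just(1, 2, 0, 4)
                .map(i -> "100 / " + i + " = " + (100 / i)) // fails on 0
                .onErrorReturn("Divided by zero :(")       // fallback ends the sequence
                .collectList()
                .block();
    }

    public static void main(String[] args) {
        // prints [100 / 1 = 100, 100 / 2 = 50, Divided by zero :(]
        System.out.println(run());
    }
}
```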

Now we can consider each means of error handling one-by-one. When relevant, we make a